Ishan Sheth (imsheth)

The Hows - Apache Airflow 2 using DockerOperator with node.js and Gitlab container registry on Ubuntu 20


Jul 25, 2021

I'm writing this post in the hope that it saves others a lot of time and energy, and spares them the nerve-wracking phases that can lead to self-doubt. I faced those extensively and would like others to avoid them altogether.

This post focuses on how to set up Apache Airflow 2 using DockerOperator with node.js and the Gitlab container registry on Ubuntu 20.

The relevant source code for the post can be found at https://github.com/imsheth/airflow2-dockeroperator-nodejs-gitlab

Ubuntu 20.x.x

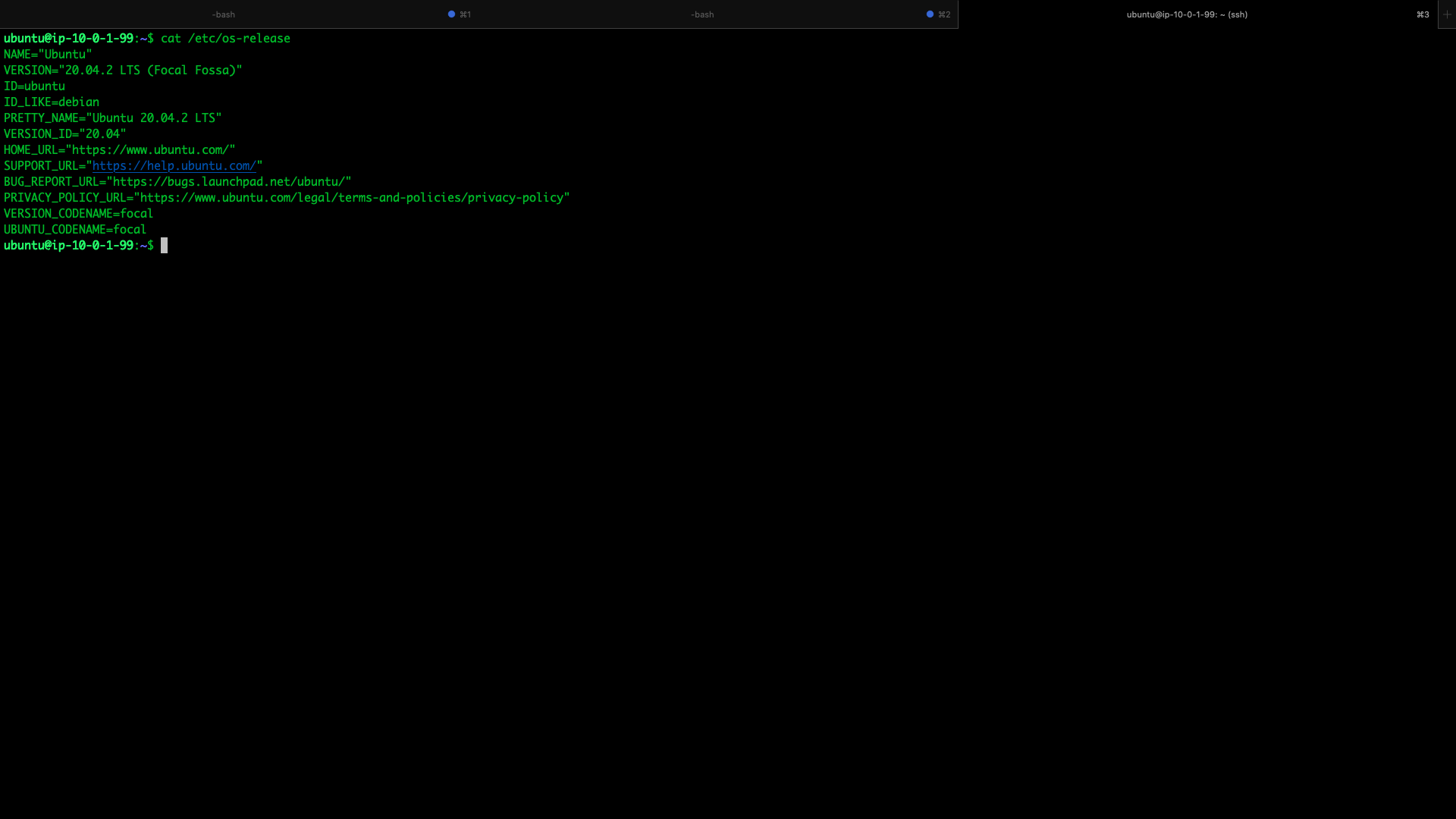

- Check the machine's operating system; it should be Ubuntu 20.x.x for the best chance of a successful installation on the first attempt

- Our test machine's operating system details: Ubuntu 20.04.2 LTS (Focal Fossa)

cat /etc/os-release

Using the LTS release is recommended but not required

This completes Ubuntu check
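If you want the same check from a script (say, in a provisioning step), here is a minimal Python sketch that reads /etc/os-release and tests for Ubuntu 20.x. The helper names are my own, hypothetical ones, and it only uses the standard library.

```python
from pathlib import Path


def parse_os_release(text):
    """Return the KEY=value pairs of os-release content as a dict."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Values may be wrapped in single or double quotes; drop them
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        info[key] = value
    return info


def is_ubuntu_20(info):
    """True when the parsed os-release describes an Ubuntu 20.x release."""
    return info.get("ID") == "ubuntu" and info.get("VERSION_ID", "").startswith("20.")


if __name__ == "__main__":
    os_release = Path("/etc/os-release")
    if os_release.exists():
        info = parse_os_release(os_release.read_text())
        print(info.get("PRETTY_NAME"), "OK" if is_ubuntu_20(info) else "is not Ubuntu 20.x")
```

On our test machine this would report "Ubuntu 20.04.2 LTS OK"; anything else (for example 18.04) fails the check.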

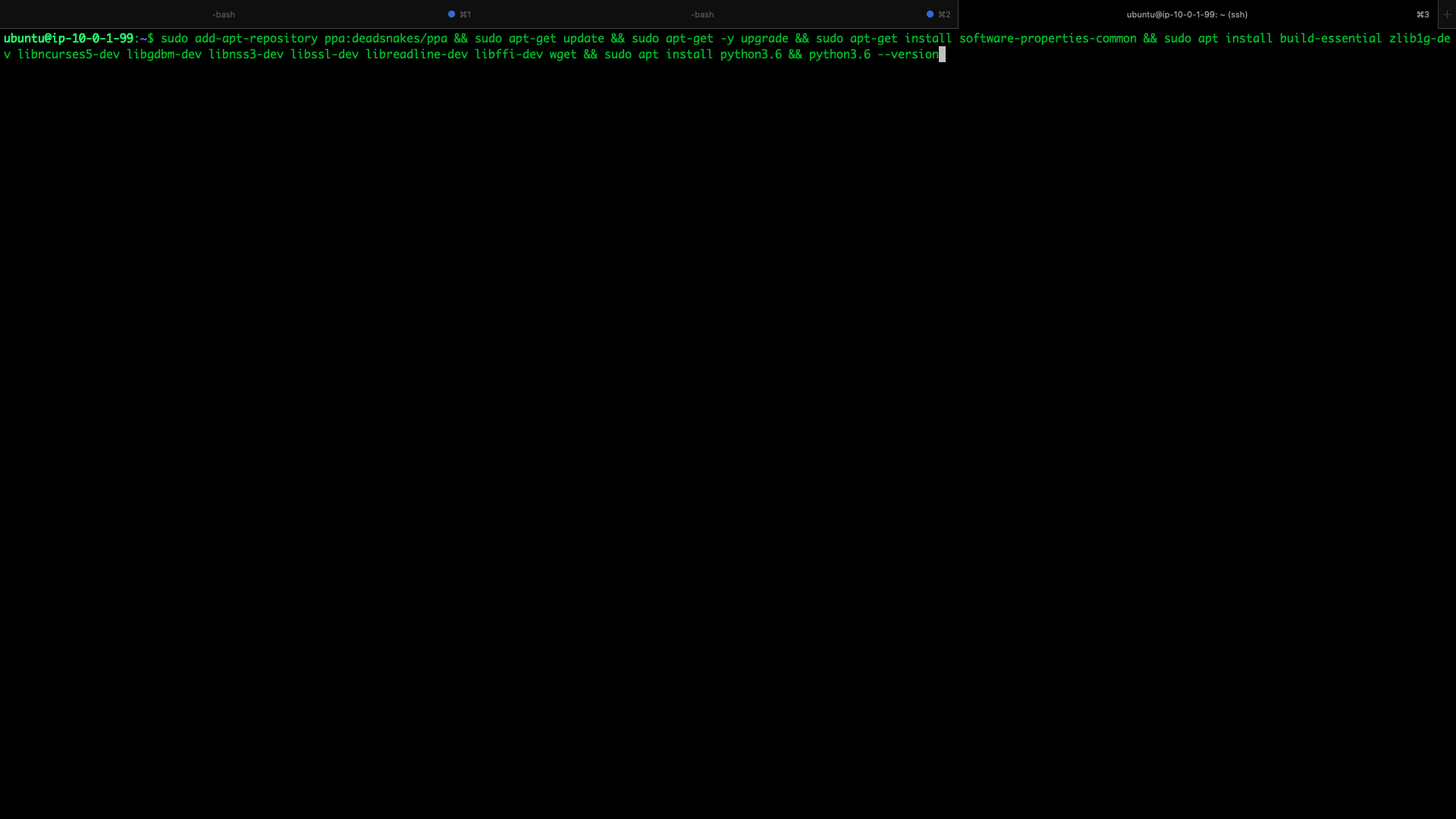

python 3.6

- Airflow works only with specific Python versions. Install python 3.6: in my testing, other versions did not work with Airflow's DockerOperator, even though the documentation states otherwise

- python ppa installation

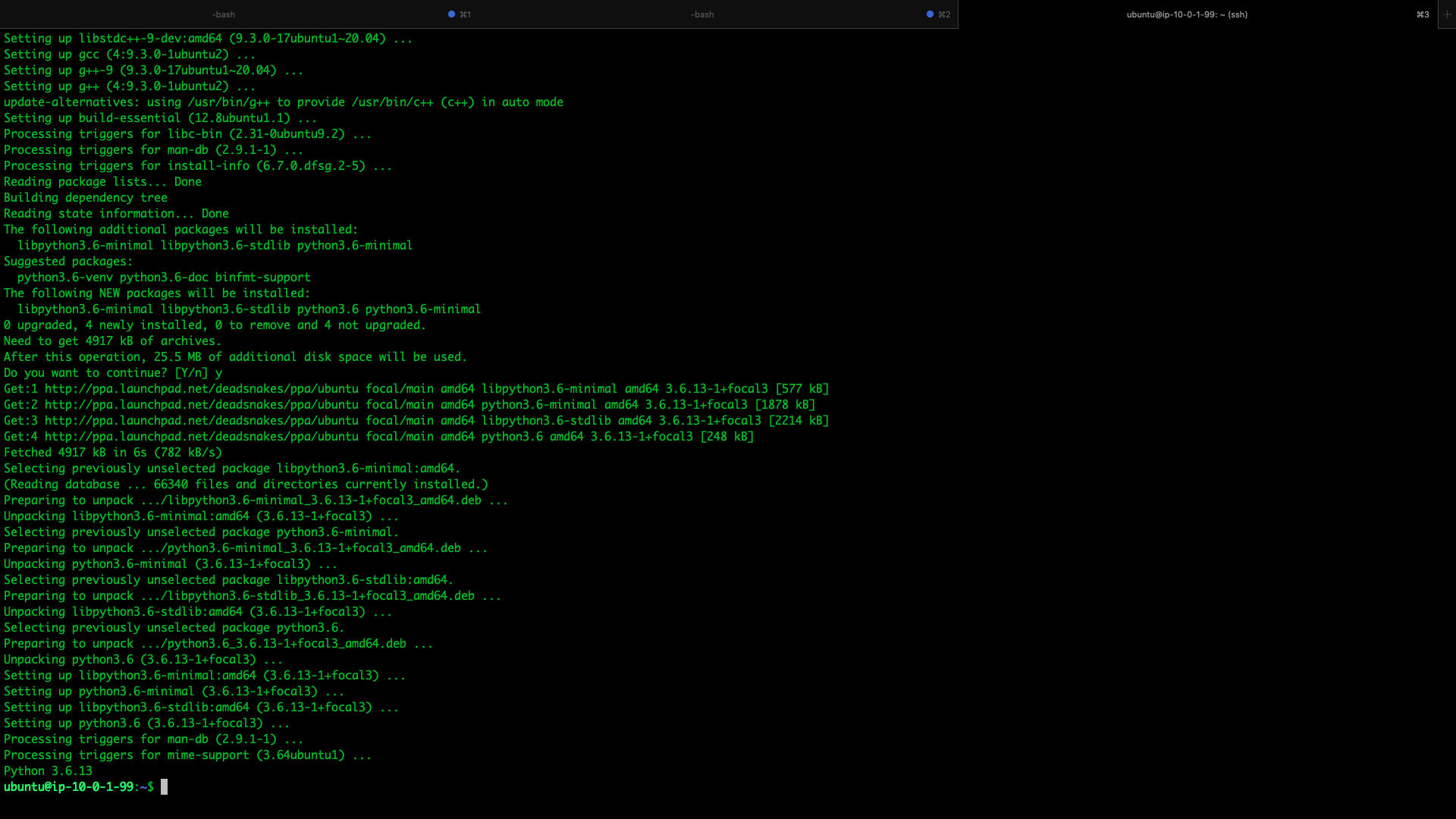

- Install python 3.6 and required packages

sudo apt-get update && sudo apt-get install software-properties-common && sudo add-apt-repository ppa:deadsnakes/ppa && sudo apt-get update && sudo apt-get -y upgrade && sudo apt install build-essential zlib1g-dev libncurses5-dev libgdbm-dev libnss3-dev libssl-dev libreadline-dev libffi-dev wget && sudo apt install python3.6 && python3.6 --version

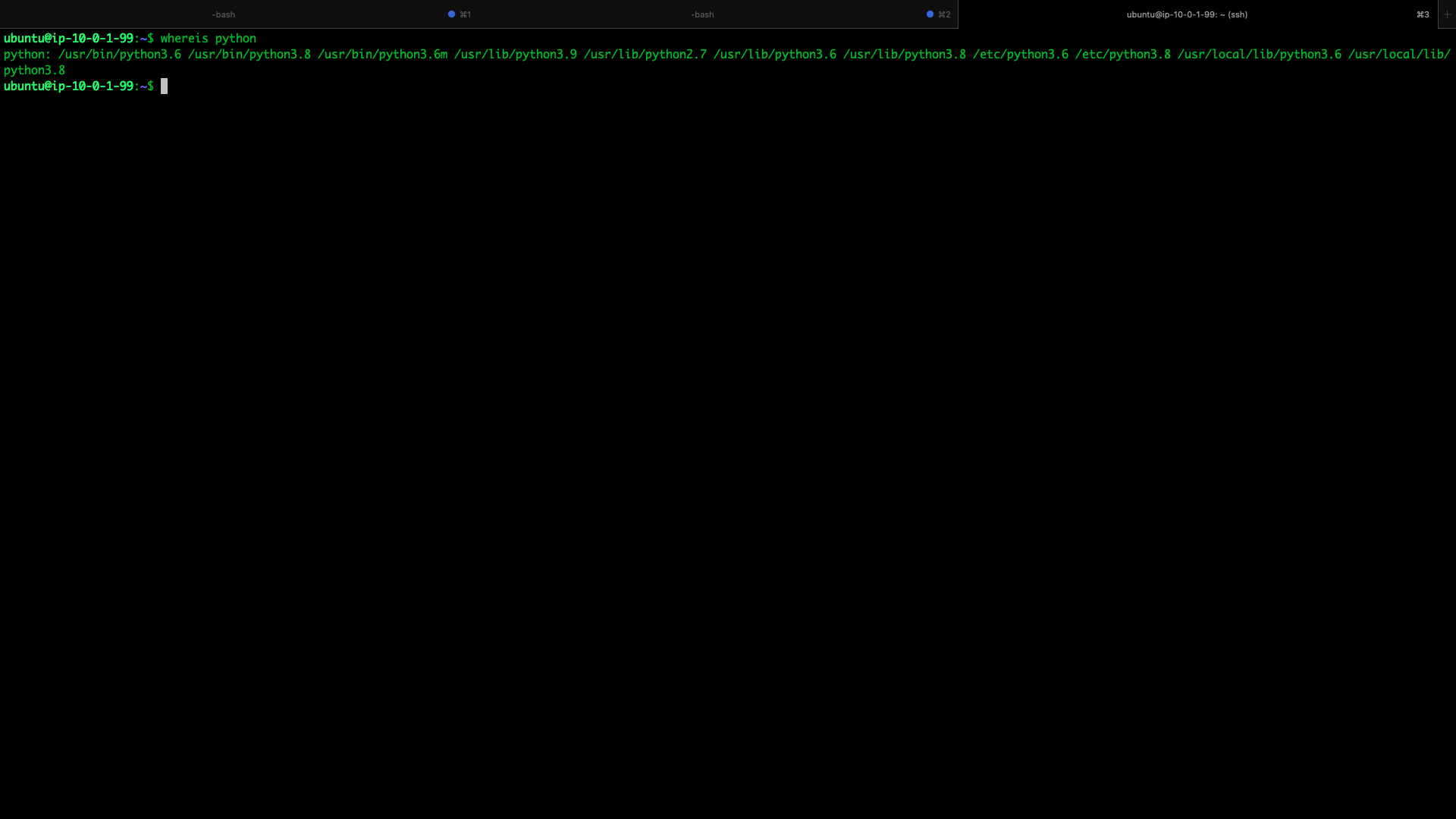

- Verify python 3.6 installation path

whereis python

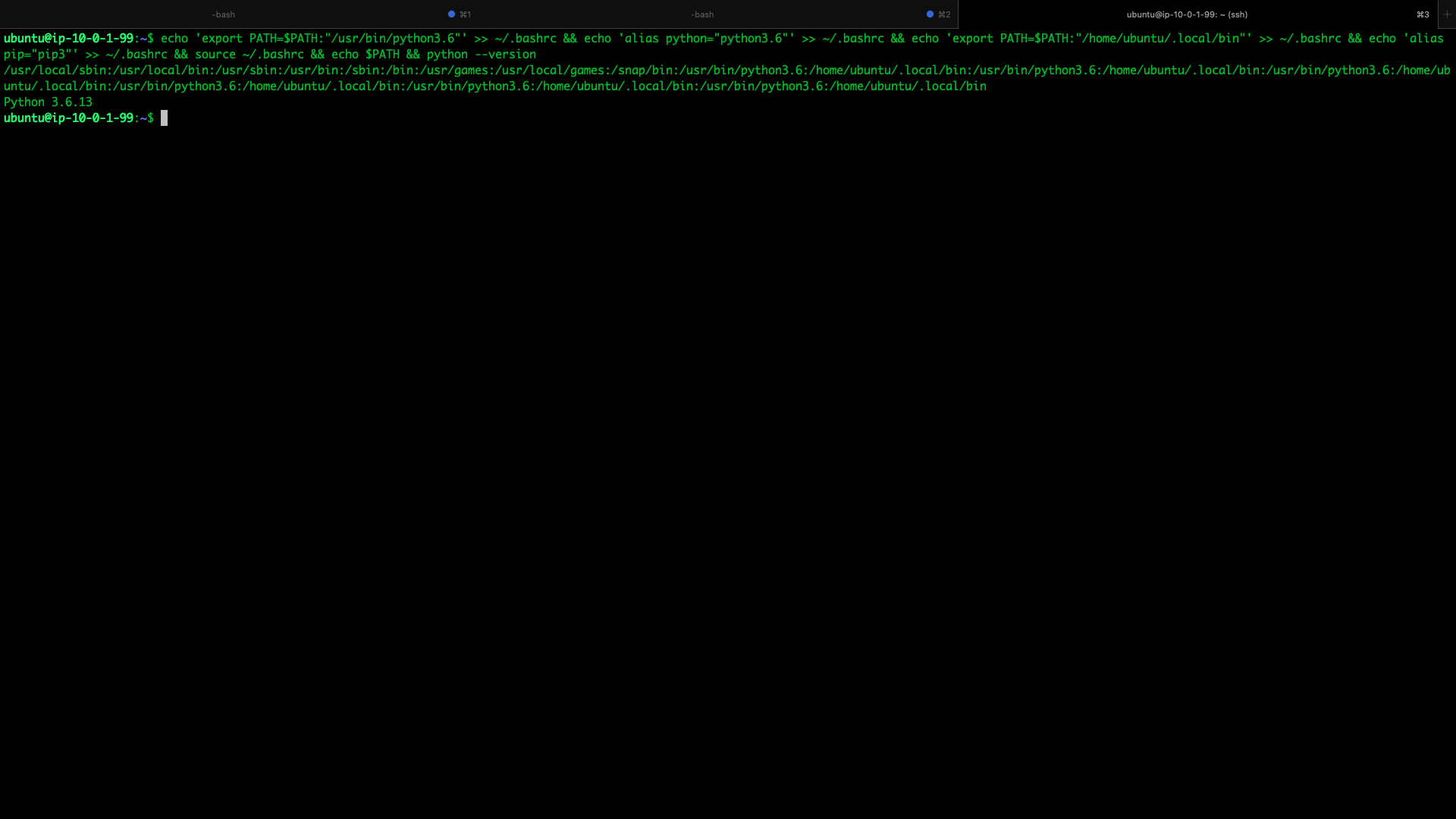

- Set environment/path/alias variables for python 3.6 & pip 20.2.4

- Setting path variable for python 3.6

- Set alias for python to point to python 3.6

- Set alias for python to point to python 3.6 in .bashrc

echo 'export PATH=$PATH:"/usr/bin/python3.6"' >> ~/.bashrc && echo 'alias python="python3.6"' >> ~/.bashrc && echo 'export PATH=$PATH:"/home/ubuntu/.local/bin"' >> ~/.bashrc && echo 'alias pip="pip3"' >> ~/.bashrc && source ~/.bashrc && echo $PATH && python --version

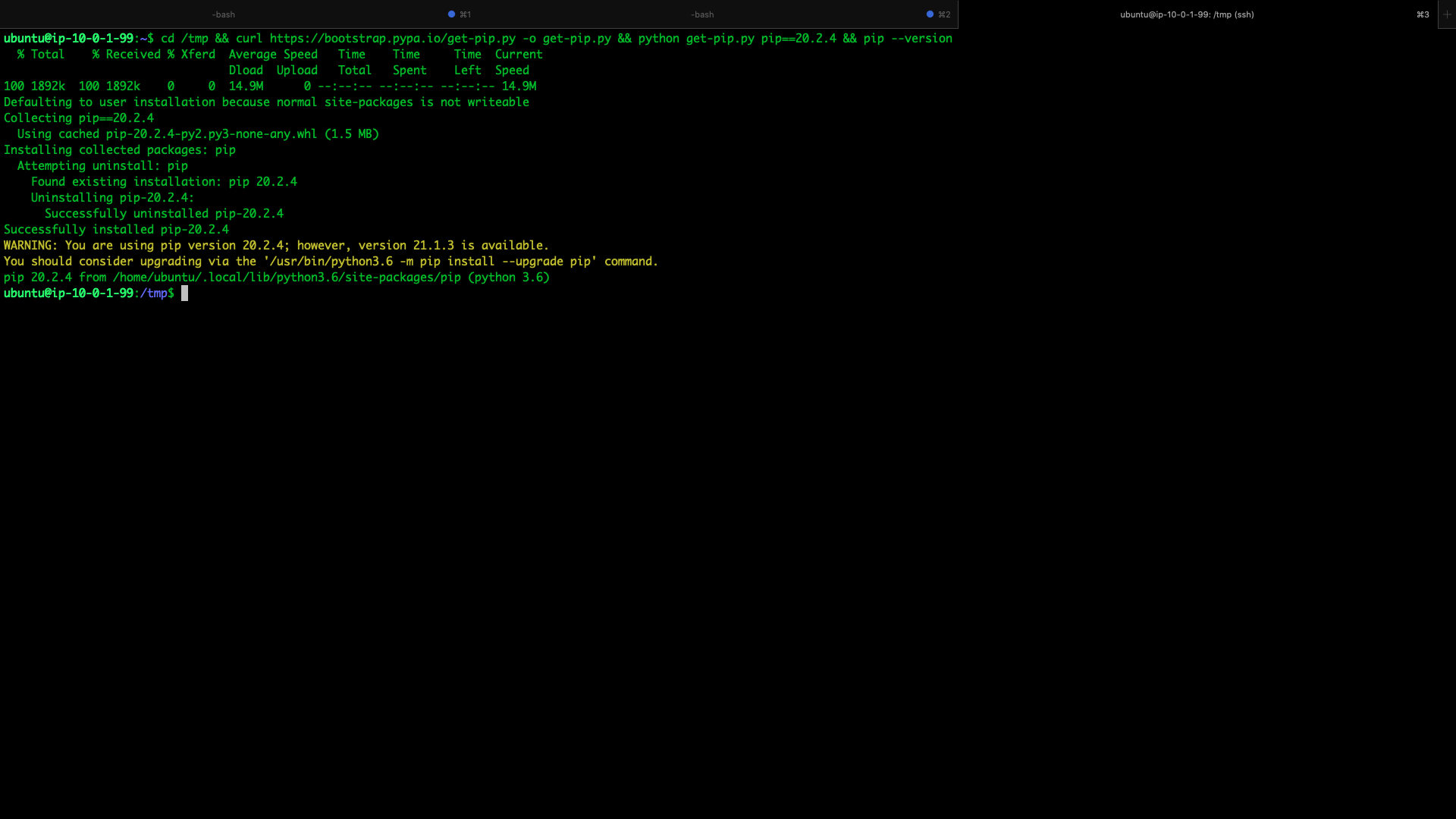

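- pip 20.2.4 (use the Python 3.6 specific get-pip.py, as the generic script no longer supports Python 3.6)

cd /tmp && curl https://bootstrap.pypa.io/pip/3.6/get-pip.py -o get-pip.py && python get-pip.py pip==20.2.4 && pip --version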
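To confirm that pip 20.2.4 is installed against python 3.6 (and not some other interpreter on the PATH), you can parse the `pip --version` line. This is a small sketch; the regular expression assumes pip's usual "pip X from LOCATION (python Y)" output format, and the helper names are hypothetical.

```python
import re
import subprocess
import sys

# Matches e.g. "pip 20.2.4 from /home/ubuntu/.local/lib/python3.6/site-packages/pip (python 3.6)"
PIP_VERSION_RE = re.compile(r"^pip (\S+) from (.+) \(python (\d+\.\d+)\)$")


def parse_pip_version(output):
    """Return (pip_version, python_version, install_location) from `pip --version` output."""
    match = PIP_VERSION_RE.match(output.strip())
    if match is None:
        raise ValueError("unexpected pip --version output: {0!r}".format(output))
    pip_version, location, python_version = match.groups()
    return pip_version, python_version, location


def pip_version_output(python=sys.executable):
    """Run `<python> -m pip --version` and return its stdout as text."""
    return subprocess.check_output([python, "-m", "pip", "--version"], universal_newlines=True)
```

For example, `parse_pip_version(pip_version_output("python3.6"))[:2]` should give `("20.2.4", "3.6")` once the step above has succeeded.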

Airflow 2.0.1

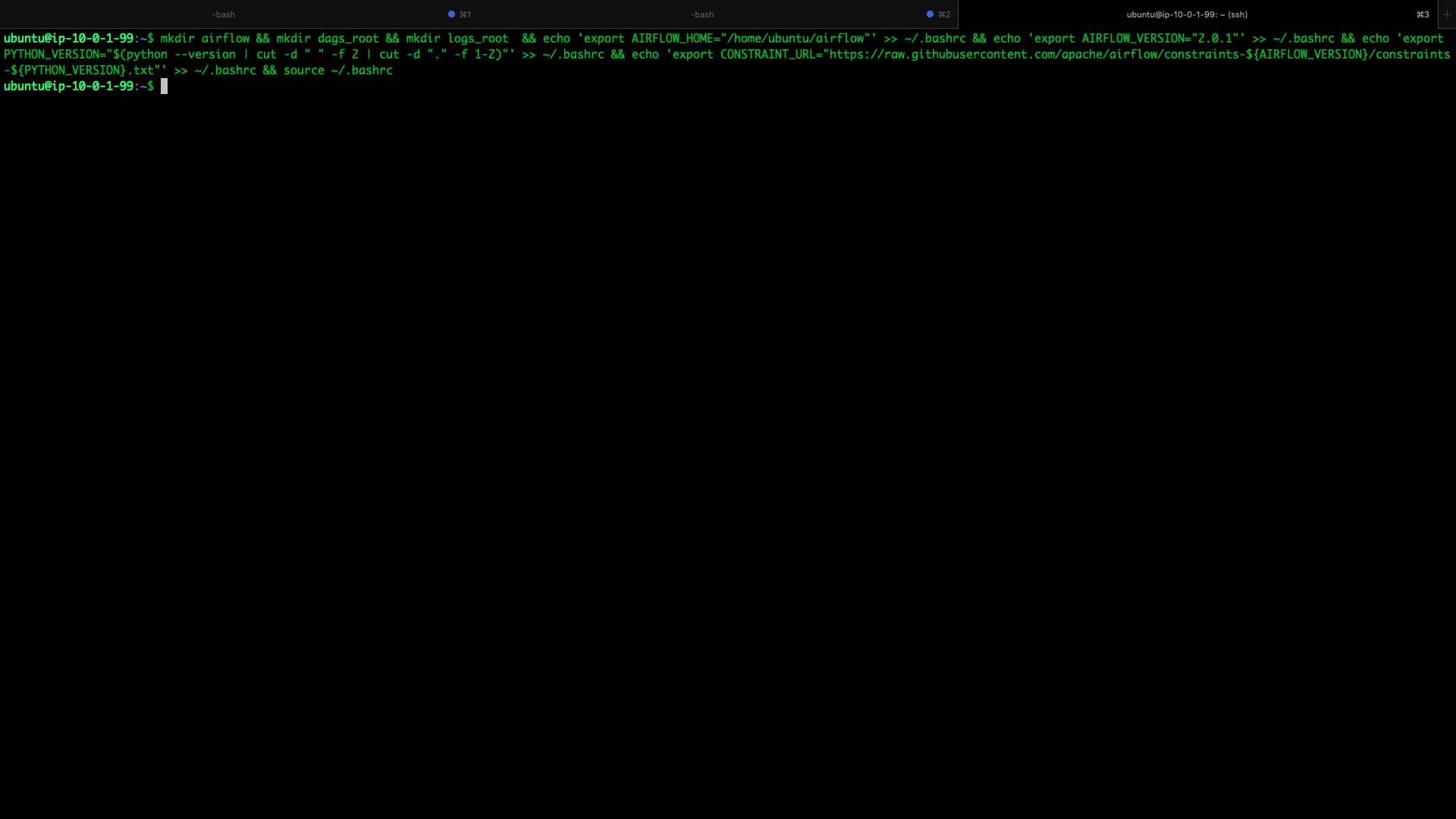

- Create directories and set environment variables

cd ~ && mkdir airflow && mkdir dags_root && mkdir logs_root && echo 'export AIRFLOW_HOME="/home/ubuntu/airflow"' >> ~/.bashrc && echo 'export AIRFLOW_VERSION="2.0.1"' >> ~/.bashrc && echo 'export PYTHON_VERSION="$(python --version | cut -d " " -f 2 | cut -d "." -f 1-2)"' >> ~/.bashrc && echo 'export CONSTRAINT_URL="https://raw.githubusercontent.com/apache/airflow/constraints-${AIRFLOW_VERSION}/constraints-${PYTHON_VERSION}.txt"' >> ~/.bashrc && source ~/.bashrc
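The PYTHON_VERSION and CONSTRAINT_URL exports above boil down to two string operations. Here is a Python sketch of the same logic, handy if you want to print the URL and check it before installing. The function names are hypothetical; the URL shape is copied from the export above.

```python
import sys


def python_minor_version(version_output):
    """'Python 3.6.13' -> '3.6' (same as: python --version | cut -d " " -f 2 | cut -d "." -f 1-2)."""
    version = version_output.split()[1]
    return ".".join(version.split(".")[:2])


def current_python_minor_version():
    """The major.minor version of the interpreter running this script."""
    return "{0}.{1}".format(*sys.version_info[:2])


def constraint_url(airflow_version, python_version):
    """Constraints file URL in the same shape as the CONSTRAINT_URL export."""
    return ("https://raw.githubusercontent.com/apache/airflow/"
            "constraints-{0}/constraints-{1}.txt".format(airflow_version, python_version))
```

With Airflow 2.0.1 on python 3.6 this gives `https://raw.githubusercontent.com/apache/airflow/constraints-2.0.1/constraints-3.6.txt`, which is what `echo $CONSTRAINT_URL` should print.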

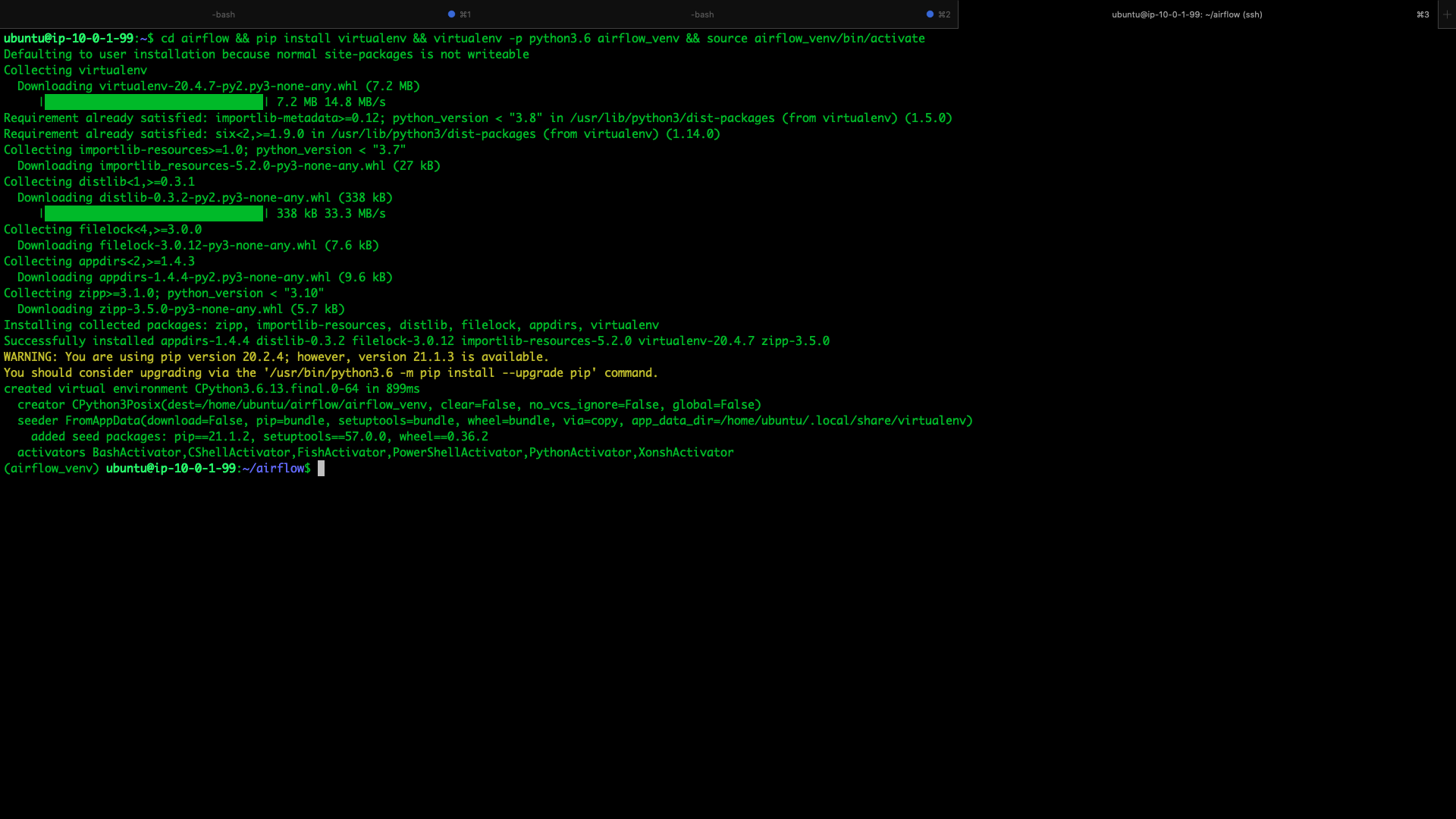

- Create a virtual environment; without one, the installation causes issues with gunicorn

cd airflow && pip install virtualenv && virtualenv -p python3.6 airflow_venv && source airflow_venv/bin/activate

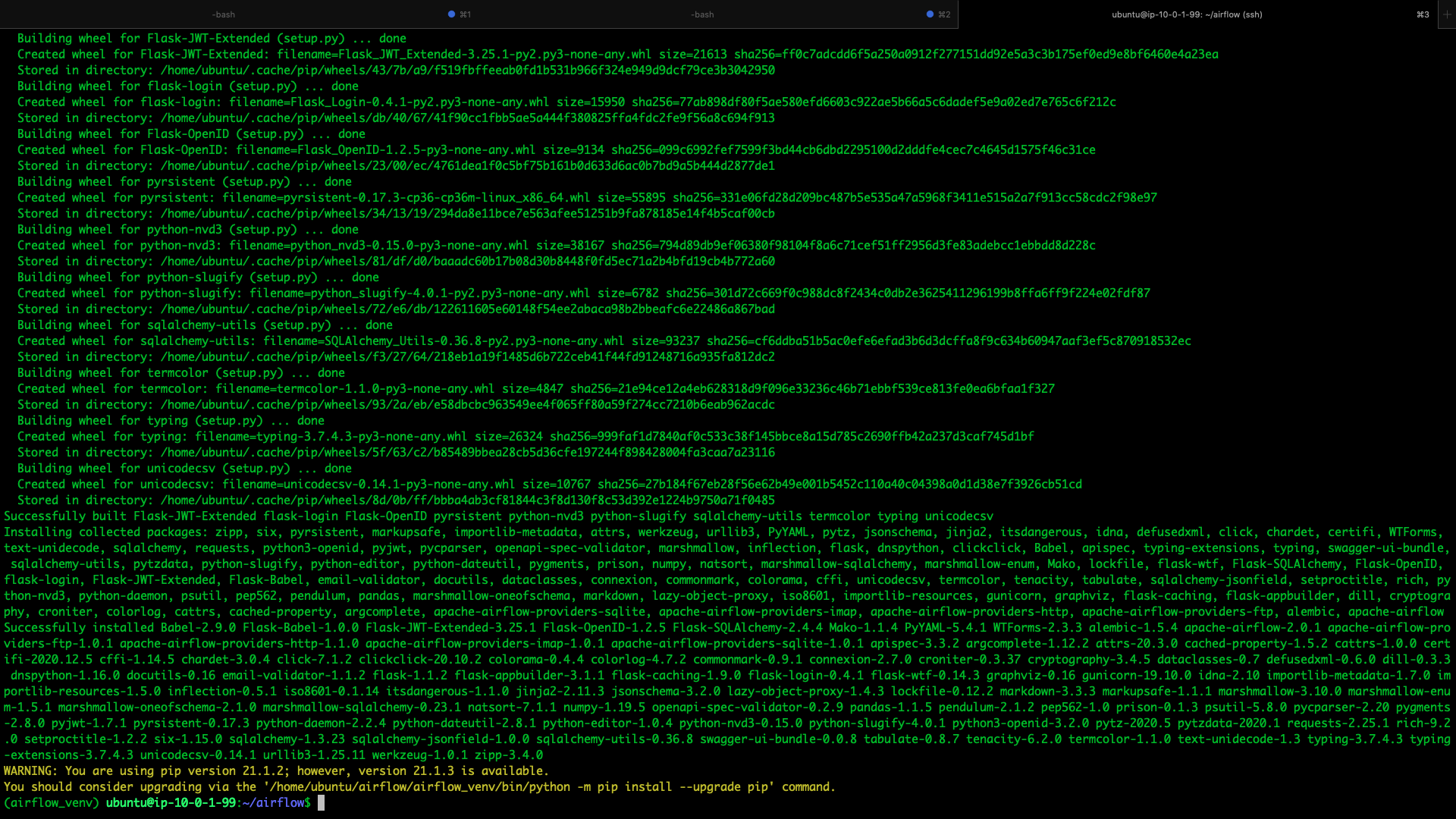

- Install Apache Airflow 2.0.1

pip install "apache-airflow==${AIRFLOW_VERSION}" --constraint "${CONSTRAINT_URL}"

- This completes Apache Airflow 2 installation

Postgres 12

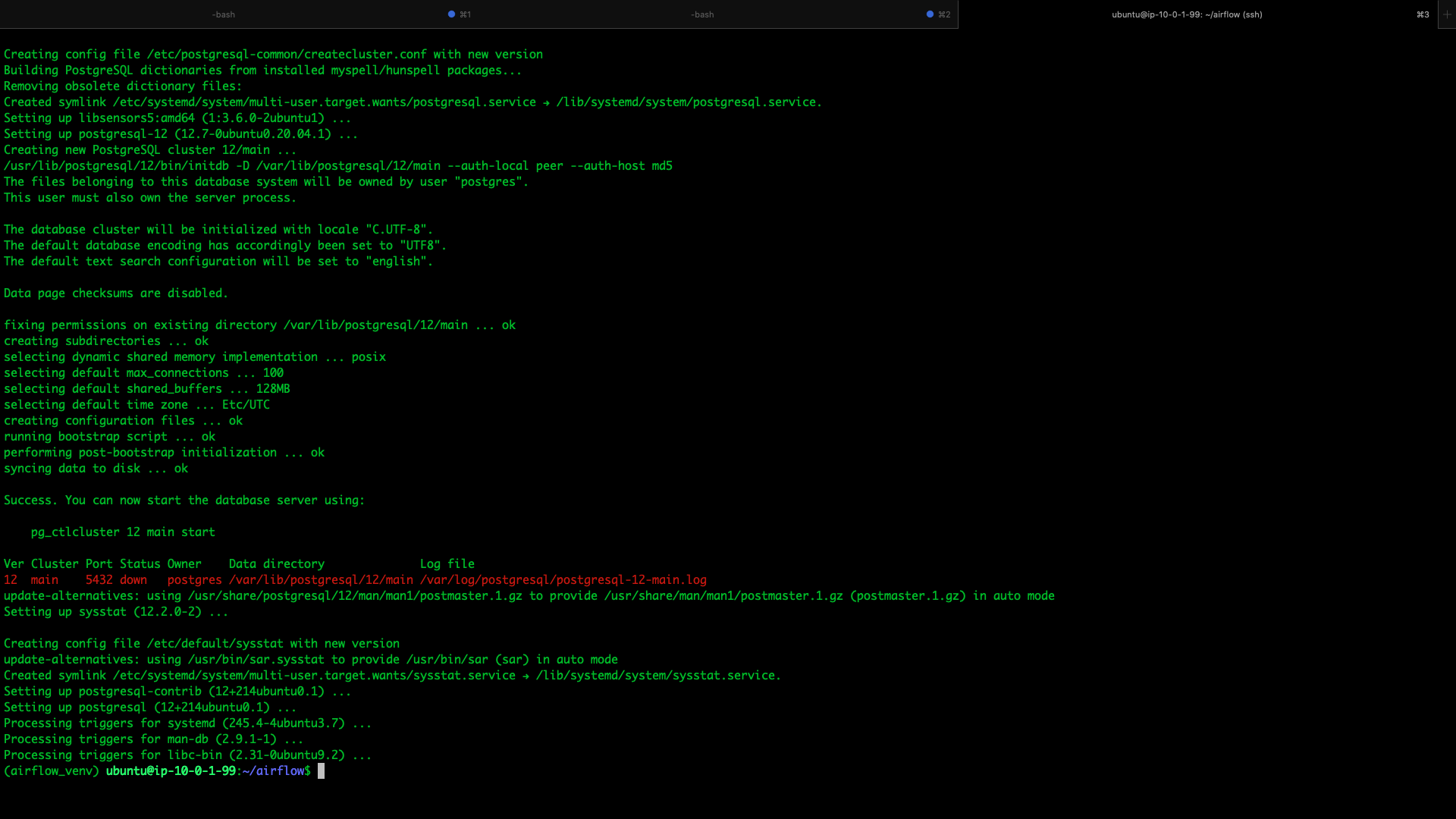

- Install Postgres 12

sudo apt update && sudo apt install postgresql postgresql-contrib

Your data directory will be /var/lib/postgresql/12/main and the log file will be /var/log/postgresql/postgresql-12-main.log

- Set up Postgres

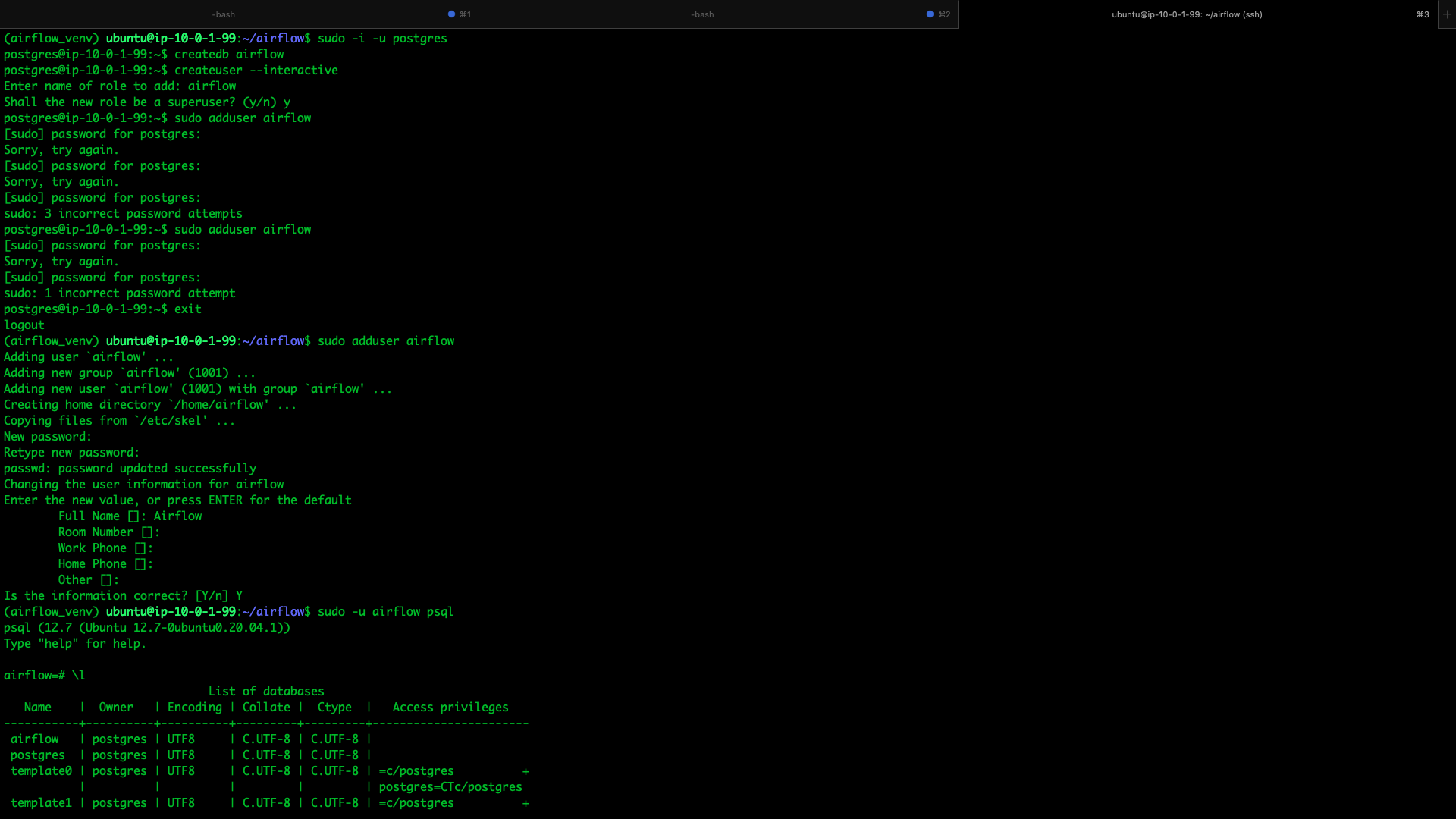

sudo -i -u postgrescreatedb airflow

createuser --interactive## Enter name of role to add: airflow

## Shall the new role be a superuser? (y/n)yexit

## Add airflow usersudo adduser airflowsudo -u airflow psql\l\c airflow\dt

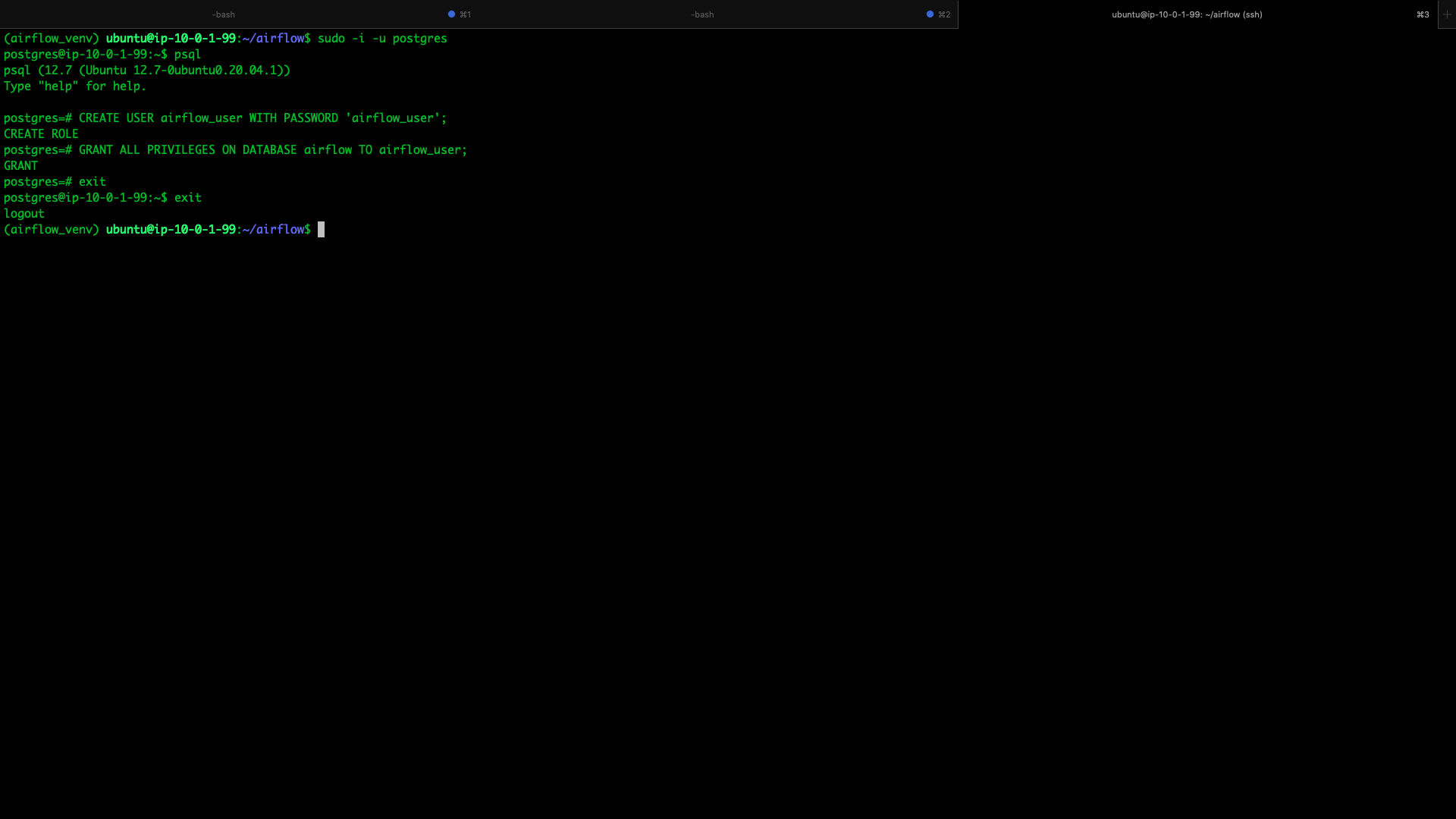

sudo -i -u postgrespsqlCREATE USER airflow_user WITH PASSWORD 'airflow_user';GRANT ALL PRIVILEGES ON DATABASE airflow TO airflow_user;exit

exit

- This completes Postgres installation and setup
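The sql_alchemy_conn value in the config section below is assembled from the database, user and password created here. If you choose a password with characters like @, : or /, they have to be percent-encoded in the URL. A minimal sketch follows; the helper name is hypothetical, and the `for_cfg` flag reflects my understanding that airflow.cfg is read with %-interpolation (its default log_format is written with %%), so a literal % must be doubled there but not in an AIRFLOW__CORE__SQL_ALCHEMY_CONN environment variable.

```python
from urllib.parse import quote_plus


def airflow_sql_alchemy_conn(user, password, host="localhost", port=5432,
                             database="airflow", for_cfg=False):
    """Build a postgresql+psycopg2 URL with percent-encoded credentials."""
    url = "postgresql+psycopg2://{0}:{1}@{2}:{3}/{4}".format(
        quote_plus(user), quote_plus(password), host, port, database)
    # Assumption: airflow.cfg uses %-interpolation, so escape % as %% for the file
    return url.replace("%", "%%") if for_cfg else url
```

`airflow_sql_alchemy_conn("airflow_user", "airflow_user")` reproduces the value used in this post, while a password such as `p@ss:word` becomes `p%40ss%3Aword` (or `p%%40ss%%3Aword` inside airflow.cfg).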

Airflow 2.0.1 config

- Change the config as required

- Defaults are available at https://airflow.apache.org/docs/apache-airflow/stable/configurations-ref.html

vim /home/ubuntu/airflow/airflow.cfg

Recommended for optimum usage

dags_folder = /home/ubuntu/dags_root

executor = LocalExecutor

sql_alchemy_conn = postgresql+psycopg2://airflow_user:airflow_user@localhost:5432/airflow

base_log_folder = /home/ubuntu/logs_root

logging_level = DEBUG

auth_backend = airflow.api.auth.backend.default

enable_xcom_pickling = True

job_heartbeat_sec = 120

min_file_process_interval = 120

scheduler_zombie_task_threshold = 1800

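Optional but useful (the Celery settings worker_autoscale, broker_url and the result backend only take effect with CeleryExecutor, not with the LocalExecutor recommended above)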

expose_config = True

base_url = http://continental.thehightable.org:8080

hostname_callable = socket:gethostname

worker_autoscale = 16,12

broker_url = amqp://guest:guest@localhost:5672//

result_backend = amqp://guest:guest@localhost:5672//
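Since a typo in airflow.cfg is easy to miss, a quick check of the recommended settings can save a restart cycle. This sketch uses configparser; the section each key lives in ([core], [logging], [api], [scheduler]) is my reading of the Airflow 2.0.x configuration reference, so treat that mapping as an assumption and adjust it if your airflow.cfg differs.

```python
import configparser

# Assumption: section placement as in the Airflow 2.0.x configuration reference
RECOMMENDED = {
    ("core", "dags_folder"): "/home/ubuntu/dags_root",
    ("core", "executor"): "LocalExecutor",
    ("core", "sql_alchemy_conn"): "postgresql+psycopg2://airflow_user:airflow_user@localhost:5432/airflow",
    ("core", "enable_xcom_pickling"): "True",
    ("logging", "base_log_folder"): "/home/ubuntu/logs_root",
    ("logging", "logging_level"): "DEBUG",
    ("api", "auth_backend"): "airflow.api.auth.backend.default",
    ("scheduler", "job_heartbeat_sec"): "120",
    ("scheduler", "min_file_process_interval"): "120",
    ("scheduler", "scheduler_zombie_task_threshold"): "1800",
}


def config_mismatches(cfg_text, expected=RECOMMENDED):
    """List settings in airflow.cfg content that differ from `expected`."""
    # interpolation=None compares the raw text (airflow.cfg contains %% escapes elsewhere)
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(cfg_text)
    problems = []
    for (section, key), want in sorted(expected.items()):
        have = parser.get(section, key, fallback=None)
        if have != want:
            problems.append("[{0}] {1}: expected {2!r}, found {3!r}".format(section, key, want, have))
    return problems
```

Usage: `print("\n".join(config_mismatches(open("/home/ubuntu/airflow/airflow.cfg").read())))` prints nothing when every recommended value is in place.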

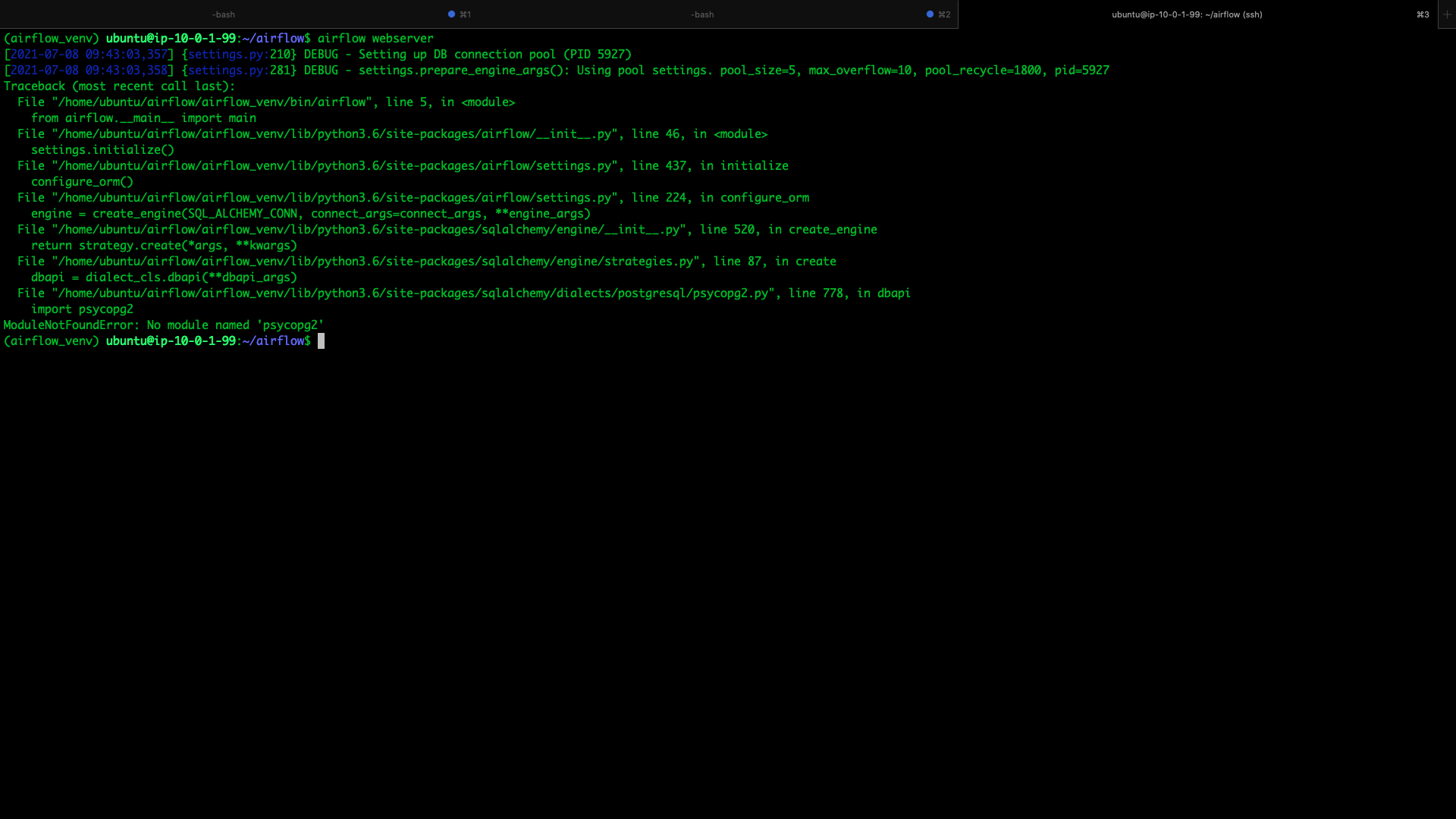

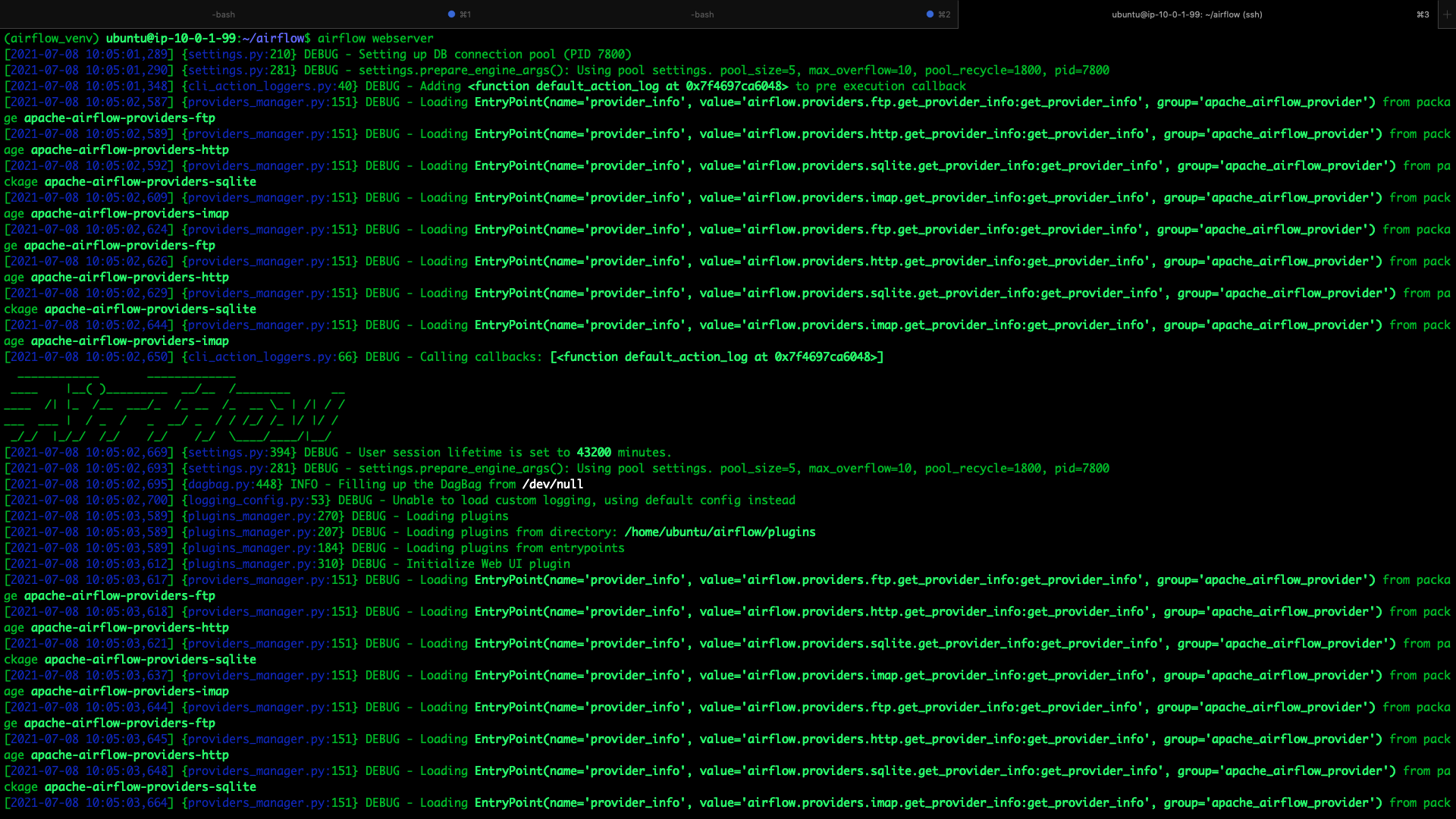

- Boot up webserver

airflow webserver

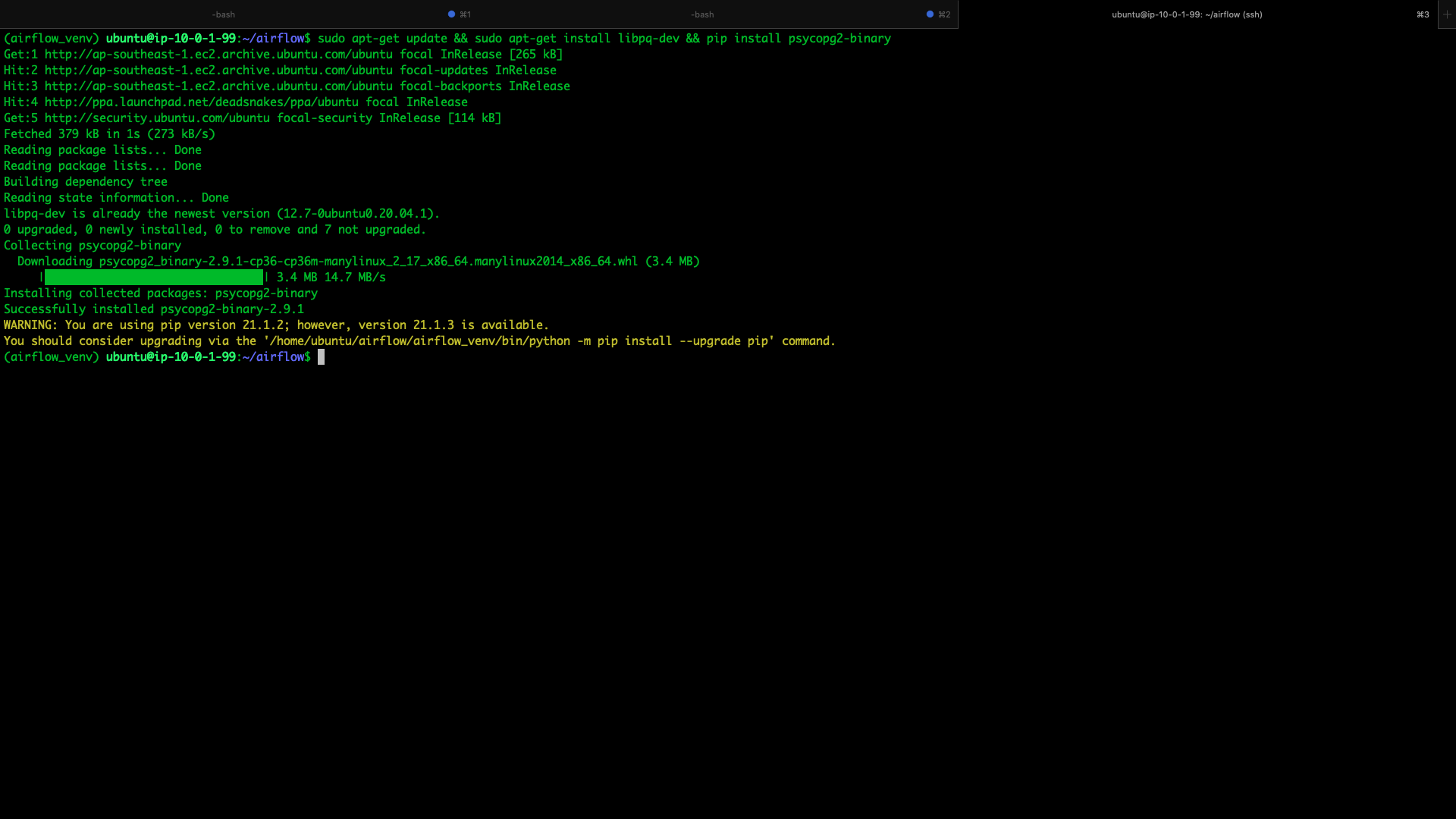

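- Install the Postgres client library and the psycopg2 driver required by the postgresql+psycopg2 connection string

sudo apt-get update && sudo apt-get install libpq-dev && pip3 install psycopg2-binary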

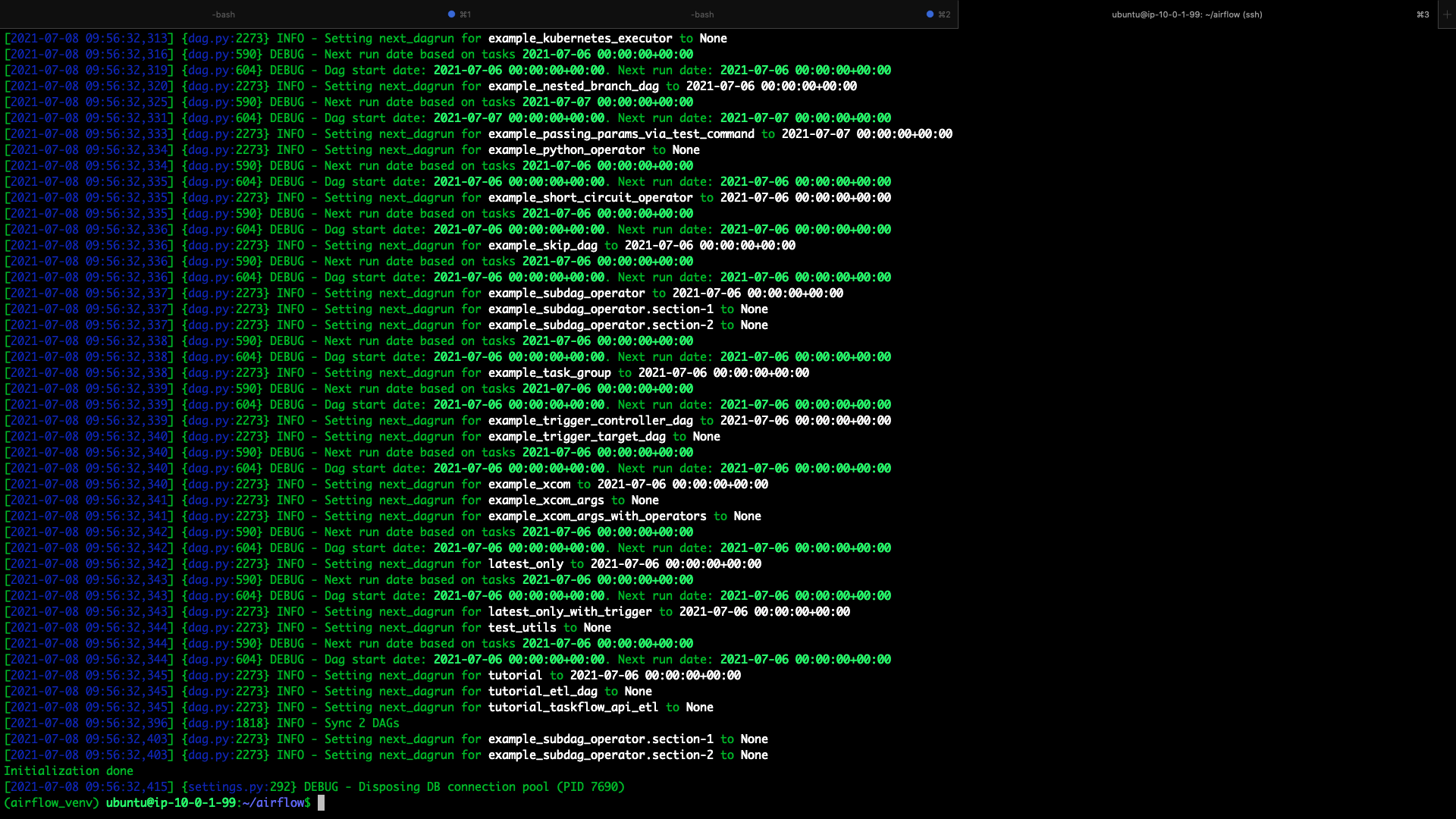

- Initialize the database (do this only after you have edited the config; otherwise it will use the settings from before your edits)

airflow db init

- Create airflow user

## username john
## password wick
airflow users create \
    --username john \
    --firstname John \
    --lastname Wick \
    --role Admin \
    --email john@thehightable.org

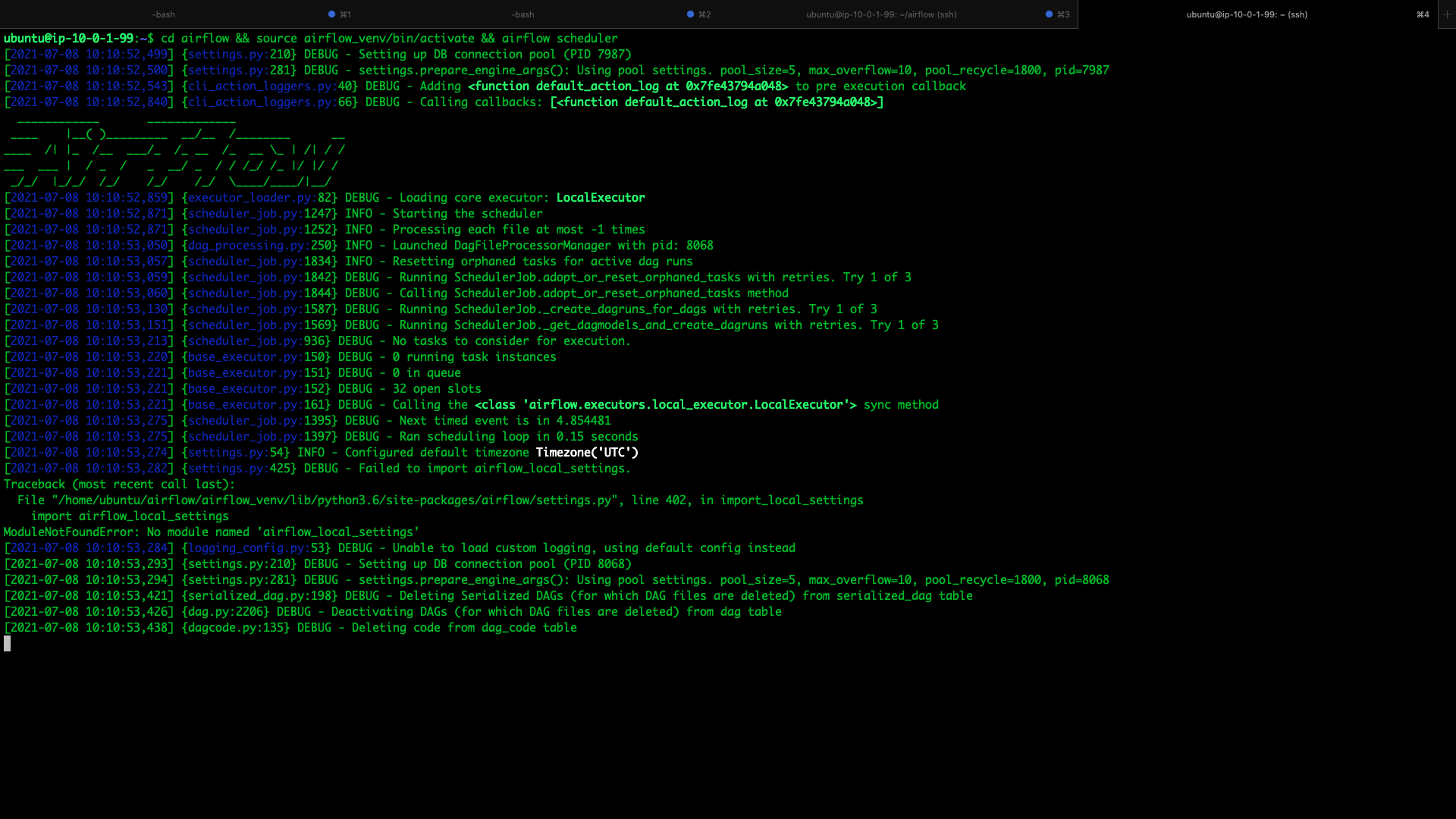

- Boot up the scheduler (from a new ssh session)

cd airflow && source airflow_venv/bin/activate && airflow scheduler

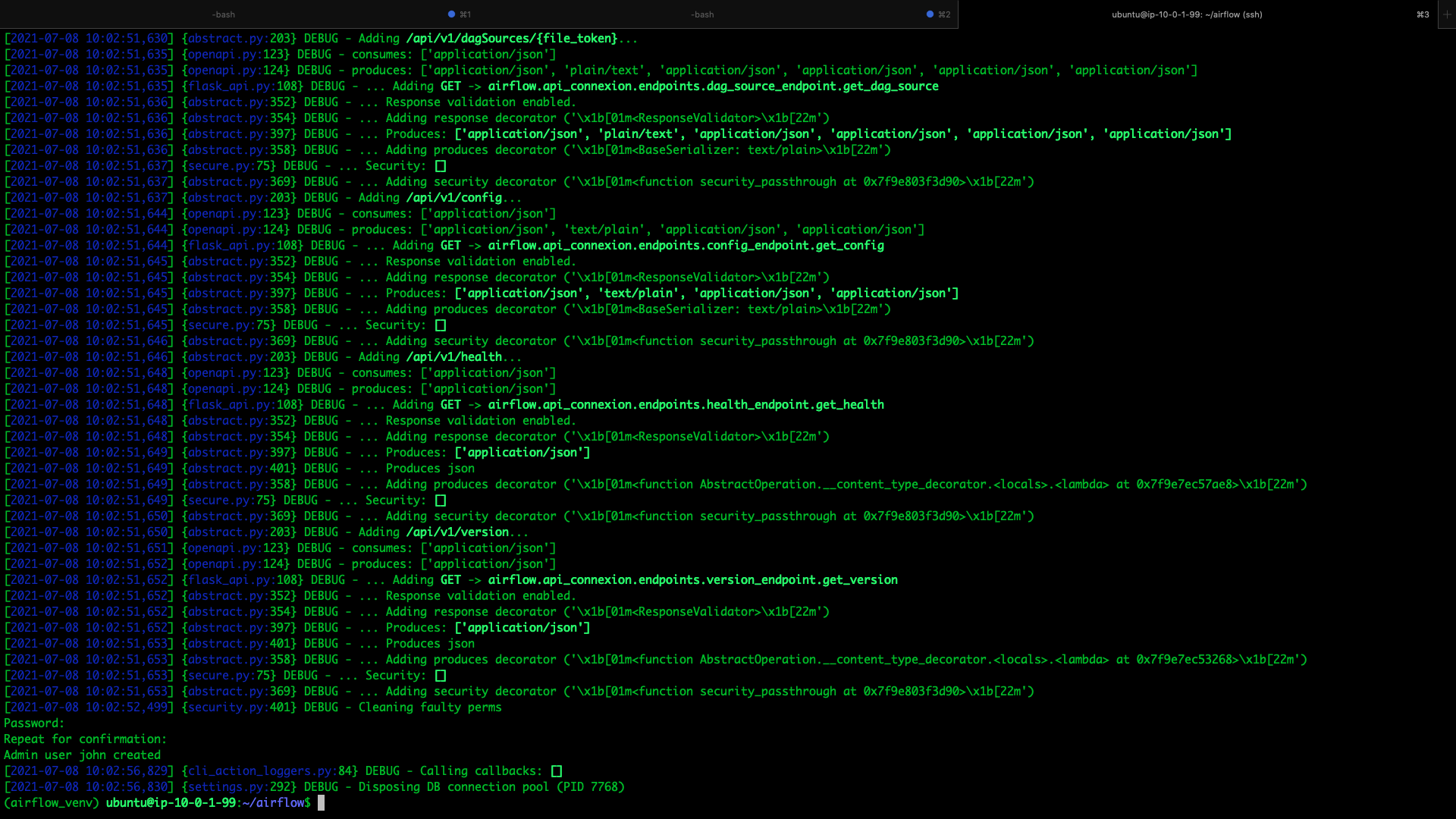

- Boot up the webserver (from the same session used in the earlier steps)

airflow webserver

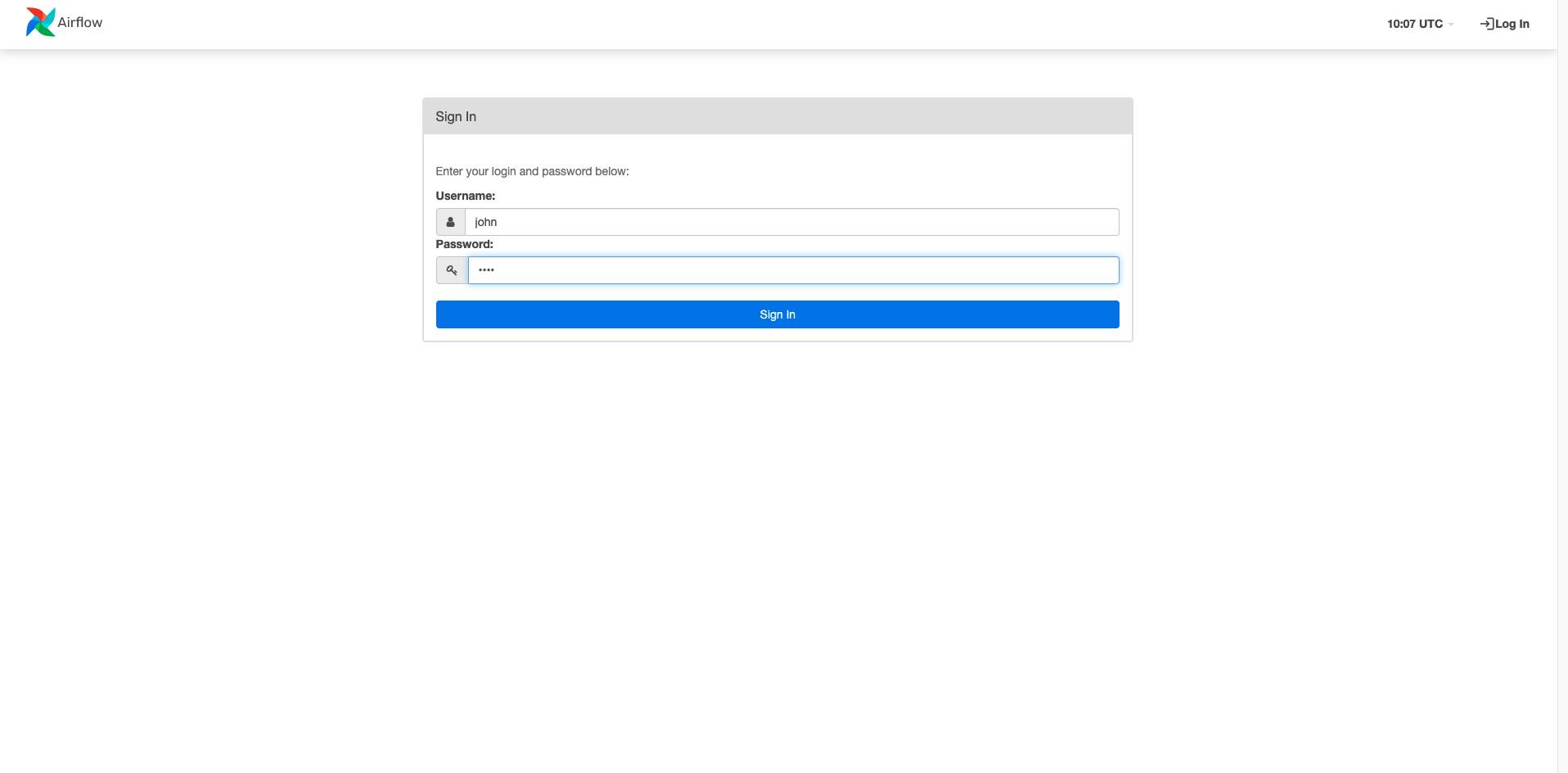

Log in at the login prompt at http://<server IP>:8080 or http://localhost:8080 (make sure port 8080 is open)

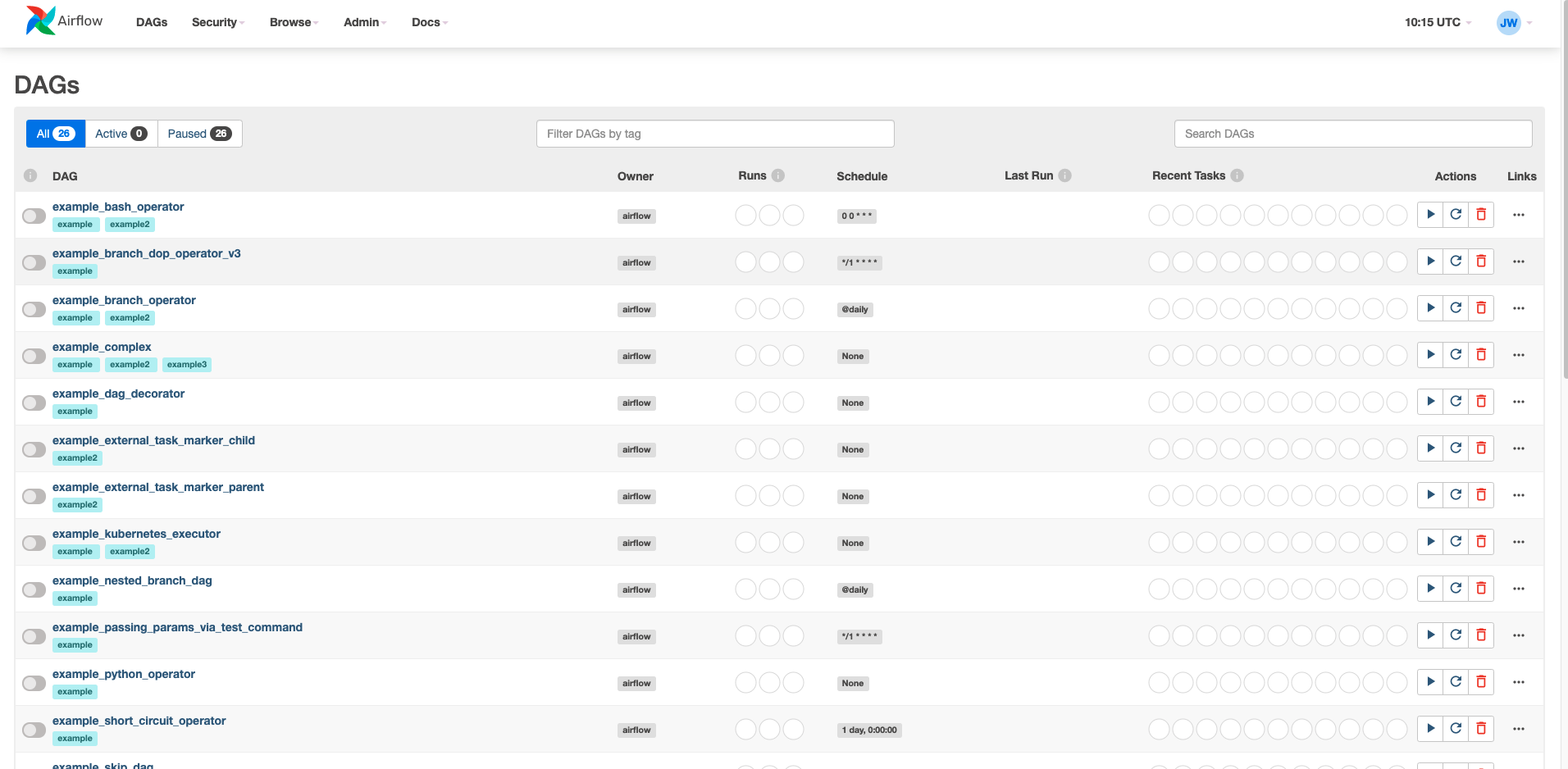

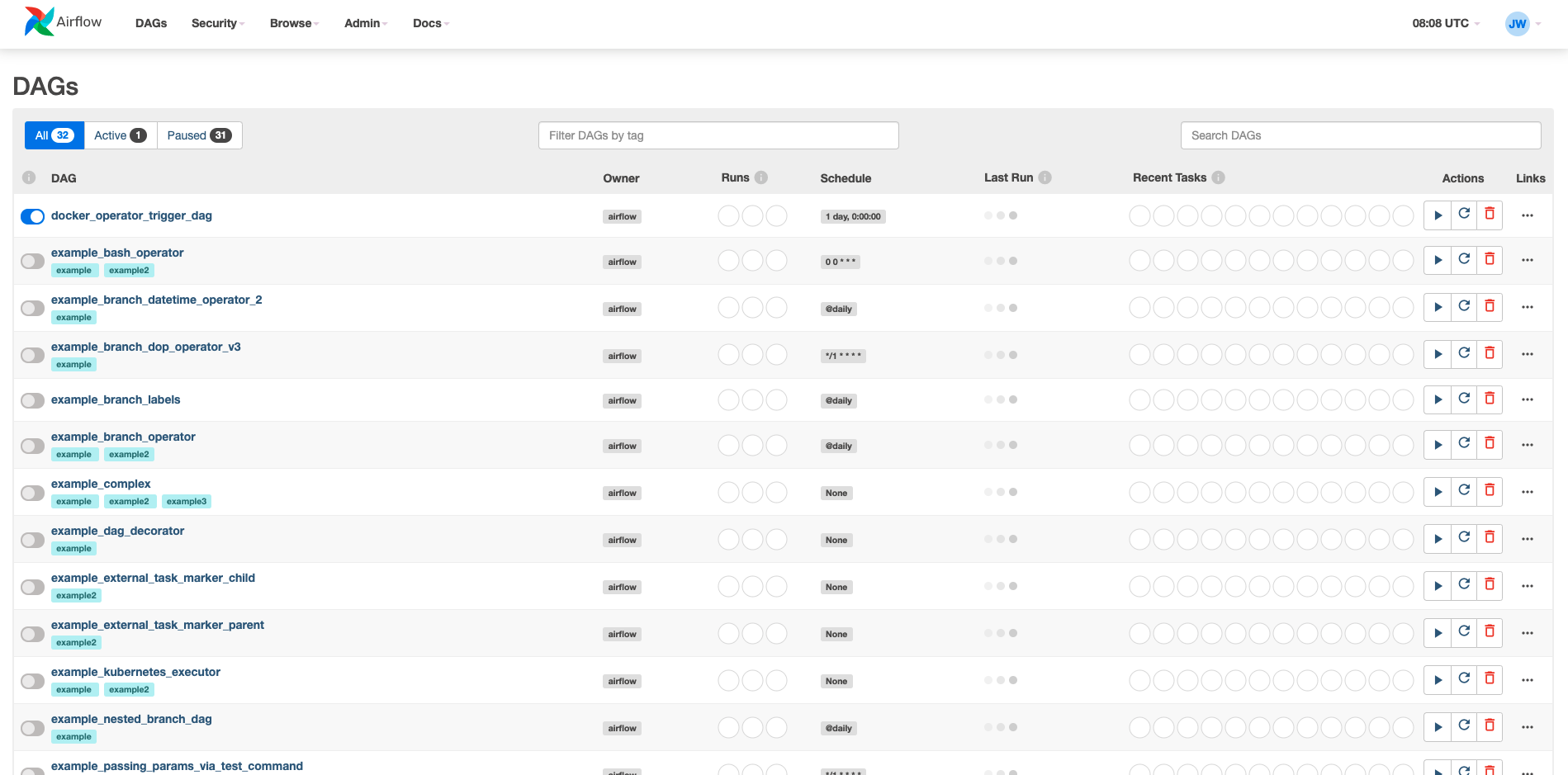

Airflow dashboard

This completes Apache Airflow 2 configuration
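Besides the dashboard, the webserver serves a /health endpoint that reports whether the metadata database and the scheduler look healthy, which is handy for monitoring or a post-deploy check. A minimal sketch using only the standard library; the JSON shape (`{"metadatabase": {"status": ...}, "scheduler": {"status": ...}}`) is how I understand the Airflow 2 endpoint, and the helper names are hypothetical.

```python
import json
from urllib.request import urlopen


def fetch_health(base_url="http://localhost:8080", timeout=10):
    """GET <base_url>/health and decode the JSON body."""
    with urlopen(base_url.rstrip("/") + "/health", timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def unhealthy_components(health):
    """Names of components whose status is not 'healthy', sorted."""
    return sorted(name for name, status in health.items()
                  if isinstance(status, dict) and status.get("status") != "healthy")
```

`unhealthy_components(fetch_health())` returning an empty list means both the database and the scheduler report healthy; a scheduler that was never started in the second ssh session shows up as `["scheduler"]`.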

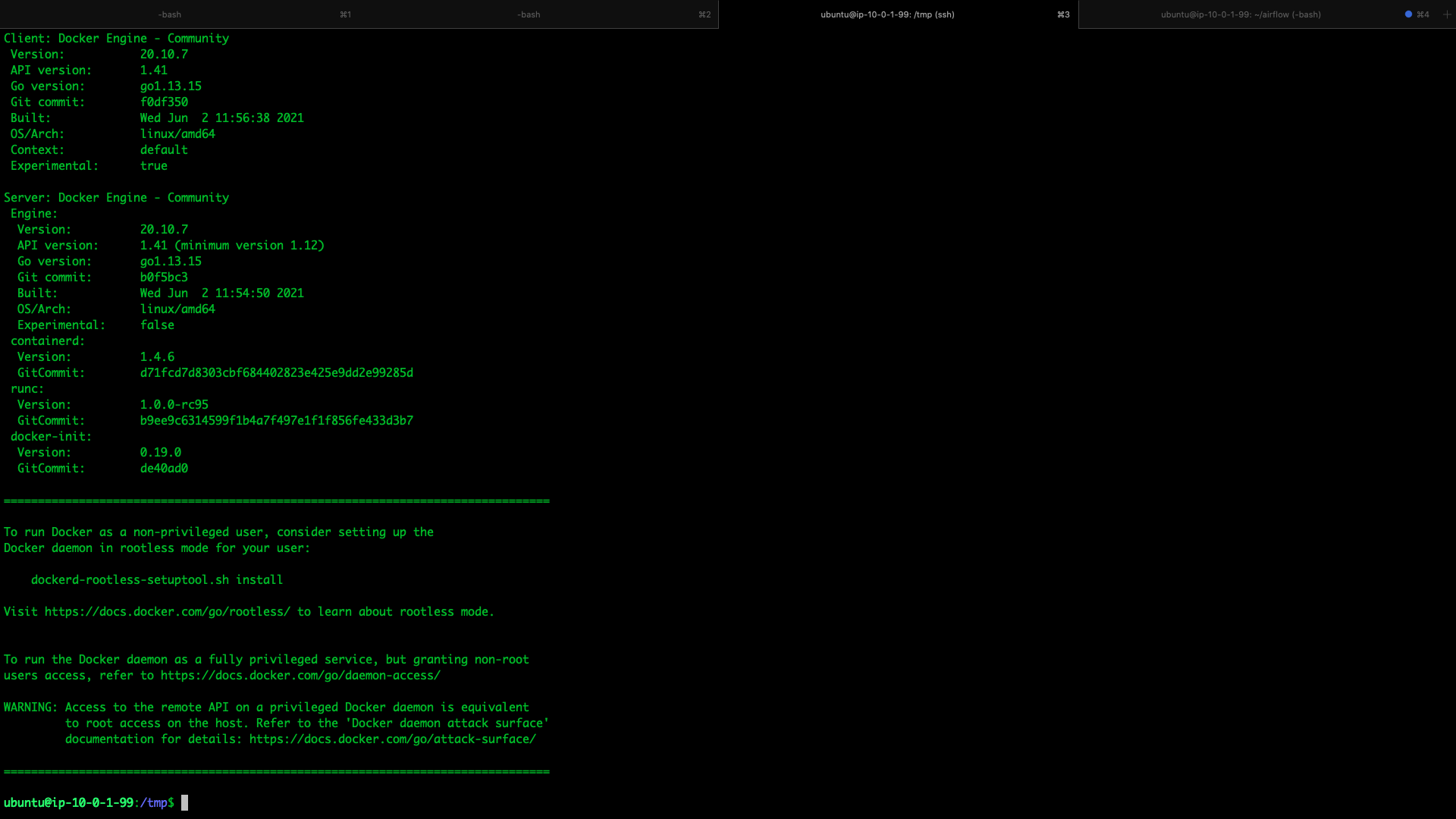

Docker 20.10.7

- Install docker 20.10.7

cd /tmp && curl -fsSL https://get.docker.com -o get-docker.sh && sh get-docker.sh

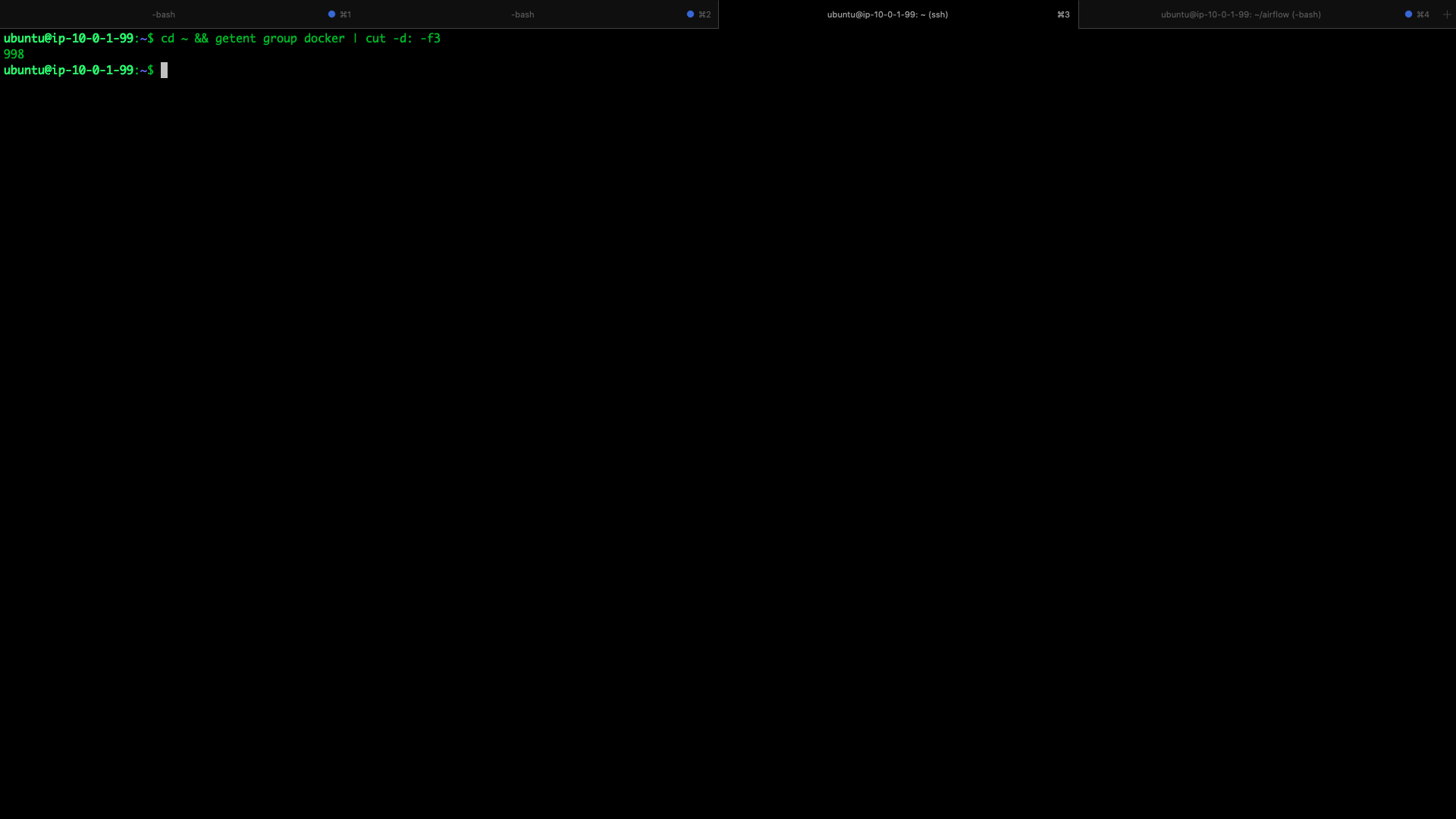

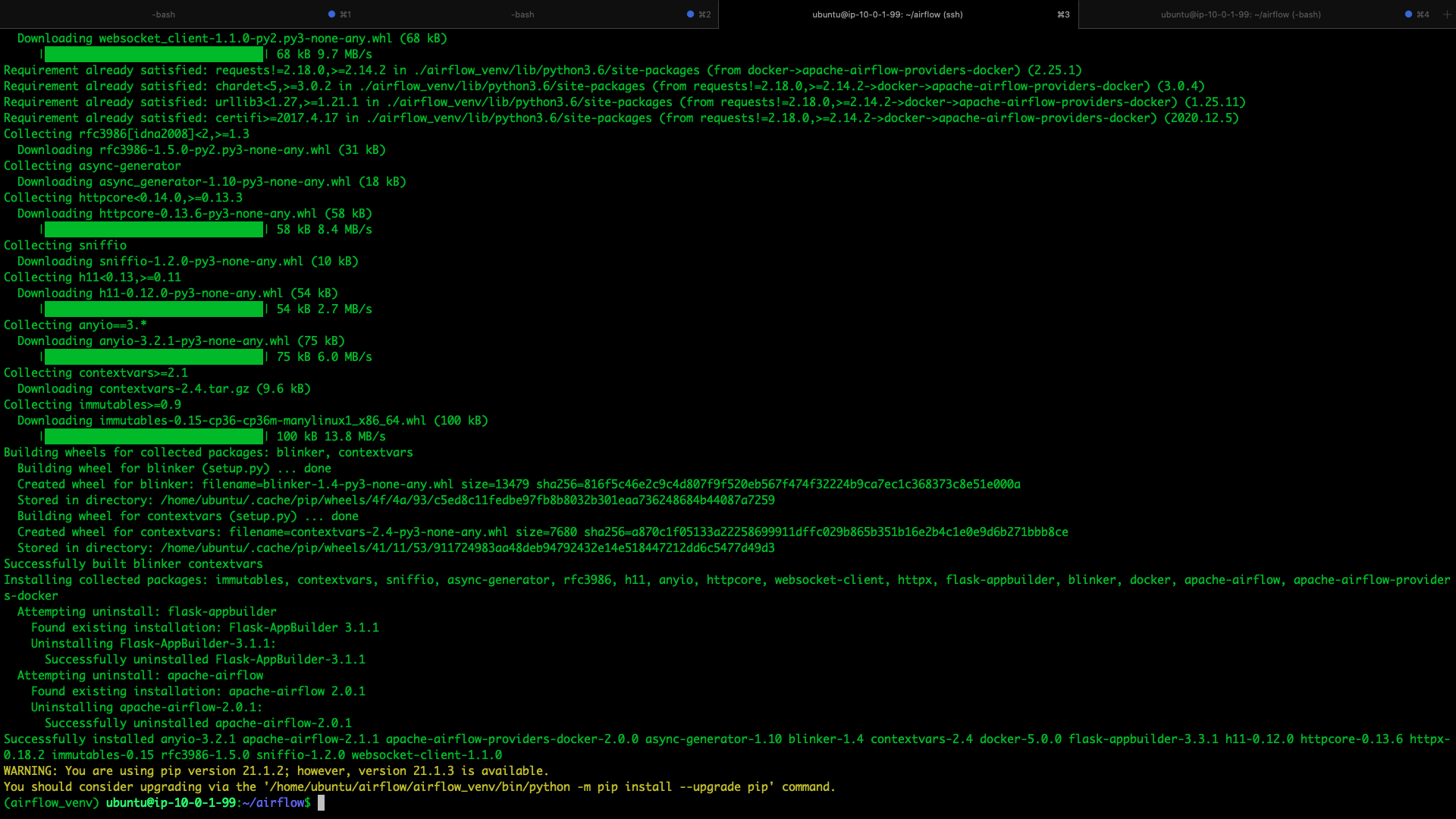

- Get the GID for the docker group

cd ~ && getent group docker | cut -d: -f3

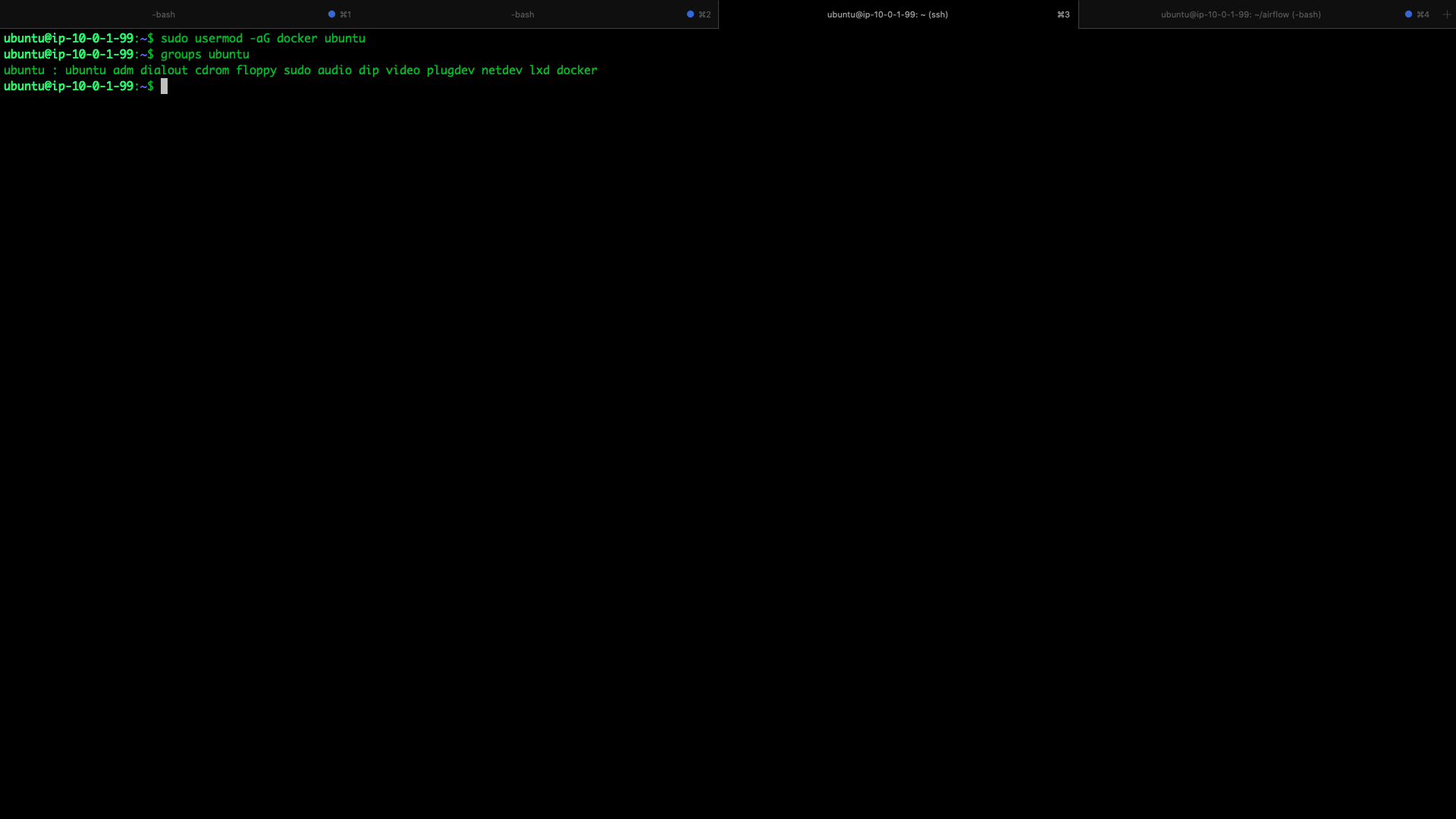

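- Add the ubuntu user to the docker group and verify it (already open sessions only pick up the new group after logging in again)

sudo usermod -aG docker ubuntu
groups ubuntu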
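The GID lookup and the membership check can also be done from Python with the standard `grp` and `pwd` modules (Unix only). This reads the group database directly, so it reflects `usermod` right away, even before you log in again. The helper names are hypothetical.

```python
import grp
import pwd


def docker_group_gid(group="docker"):
    """GID of `group` (like: getent group docker | cut -d: -f3), or None if it doesn't exist."""
    try:
        return grp.getgrnam(group).gr_gid
    except KeyError:
        return None


def user_in_group(user, group="docker"):
    """True if `user` is a listed member of `group` or has it as primary group."""
    try:
        group_entry = grp.getgrnam(group)
    except KeyError:
        return False
    if user in group_entry.gr_mem:
        return True
    try:
        return pwd.getpwnam(user).pw_gid == group_entry.gr_gid
    except KeyError:
        return False
```

After the `usermod` above, `user_in_group("ubuntu")` should return True, and `docker_group_gid()` gives the same number as the `getent` command.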

- Install the Docker provider (you can also record it in the requirements.txt of your DAGs repo by appending && pip freeze > requirements.txt to the command below)

pip install apache-airflow-providers-docker

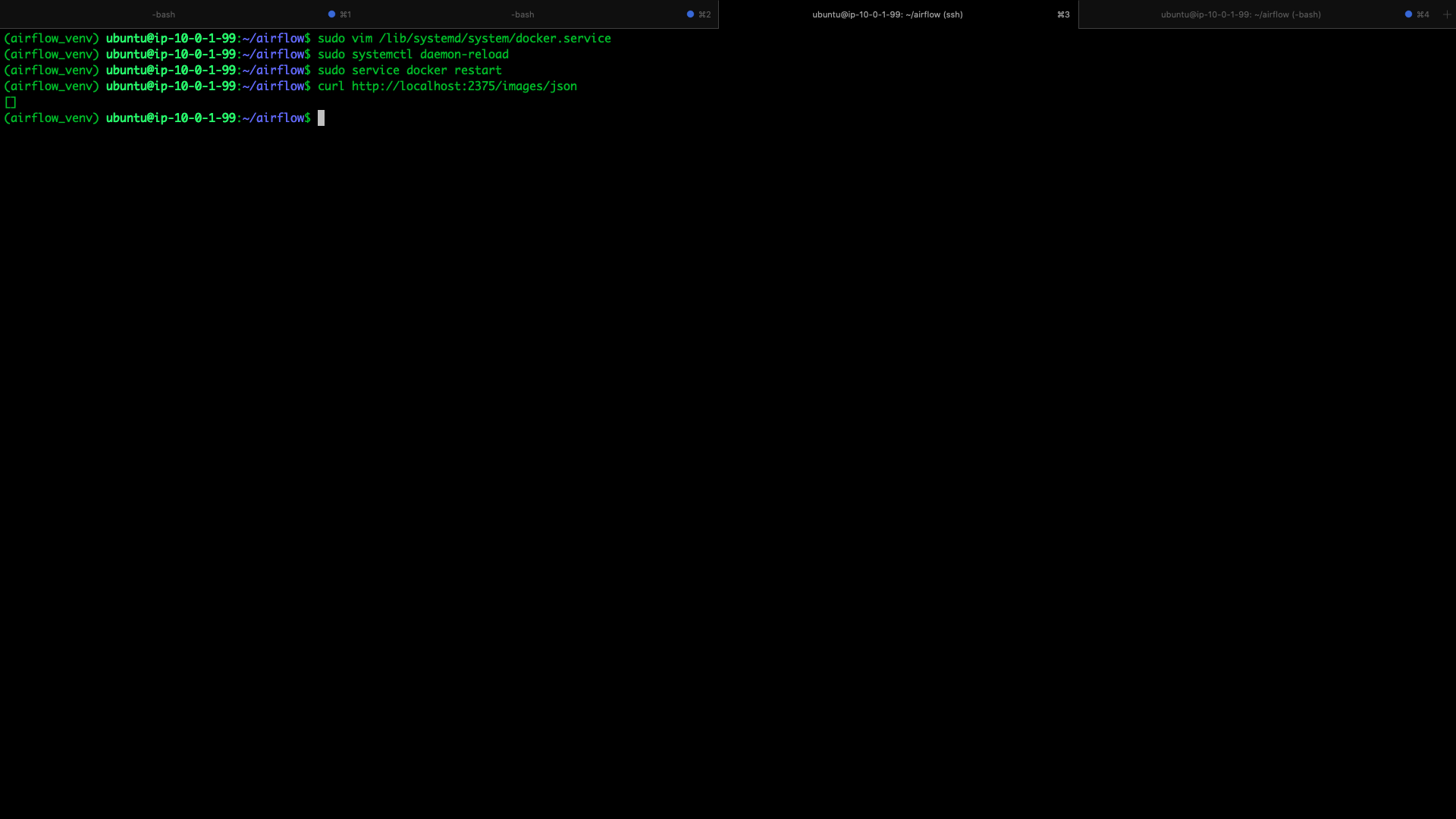

- Expose the docker remote API on port 2375

sudo vim /lib/systemd/system/docker.service

# Comment out the original line as a backup, so you can revert if needed
#ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock
ExecStart=/usr/bin/dockerd -H fd:// -H 0.0.0.0:2375 --containerd=/run/containerd/containerd.sock

- Reload the systemd daemon, restart docker and verify the docker remote API response

sudo systemctl daemon-reload
sudo service docker restart
curl http://localhost:2375/images/json

- This completes docker 20.10.7 installation and setup
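The `curl` check above returns raw JSON; this sketch does the same request from Python and keeps only the image tags, which makes it easy to confirm that the Gitlab image has been pulled. Keep in mind that port 2375 is plain HTTP with no authentication, so anyone who can reach it controls the docker daemon; keep it firewalled or bound to 127.0.0.1. The helper names are hypothetical, and `RepoTags` being null or `<none>:<none>` for untagged images is how I understand the Docker Engine API response.

```python
import json
from urllib.request import urlopen


def image_tags(images):
    """Sorted repo:tag names from an /images/json response, skipping untagged images."""
    tags = []
    for image in images:
        for tag in image.get("RepoTags") or []:
            if tag != "<none>:<none>":
                tags.append(tag)
    return sorted(tags)


def list_image_tags(docker_api="http://localhost:2375", timeout=10):
    """Query the docker remote API enabled above and return the image tags."""
    with urlopen(docker_api.rstrip("/") + "/images/json", timeout=timeout) as resp:
        return image_tags(json.loads(resp.read().decode("utf-8")))
```

After the first DAG run with `force_pull`, `list_image_tags()` should include `registry.gitlab.com/blogs-imsheth-com/airflow2-dockeroperator-nodejs-gitlab:latest`.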

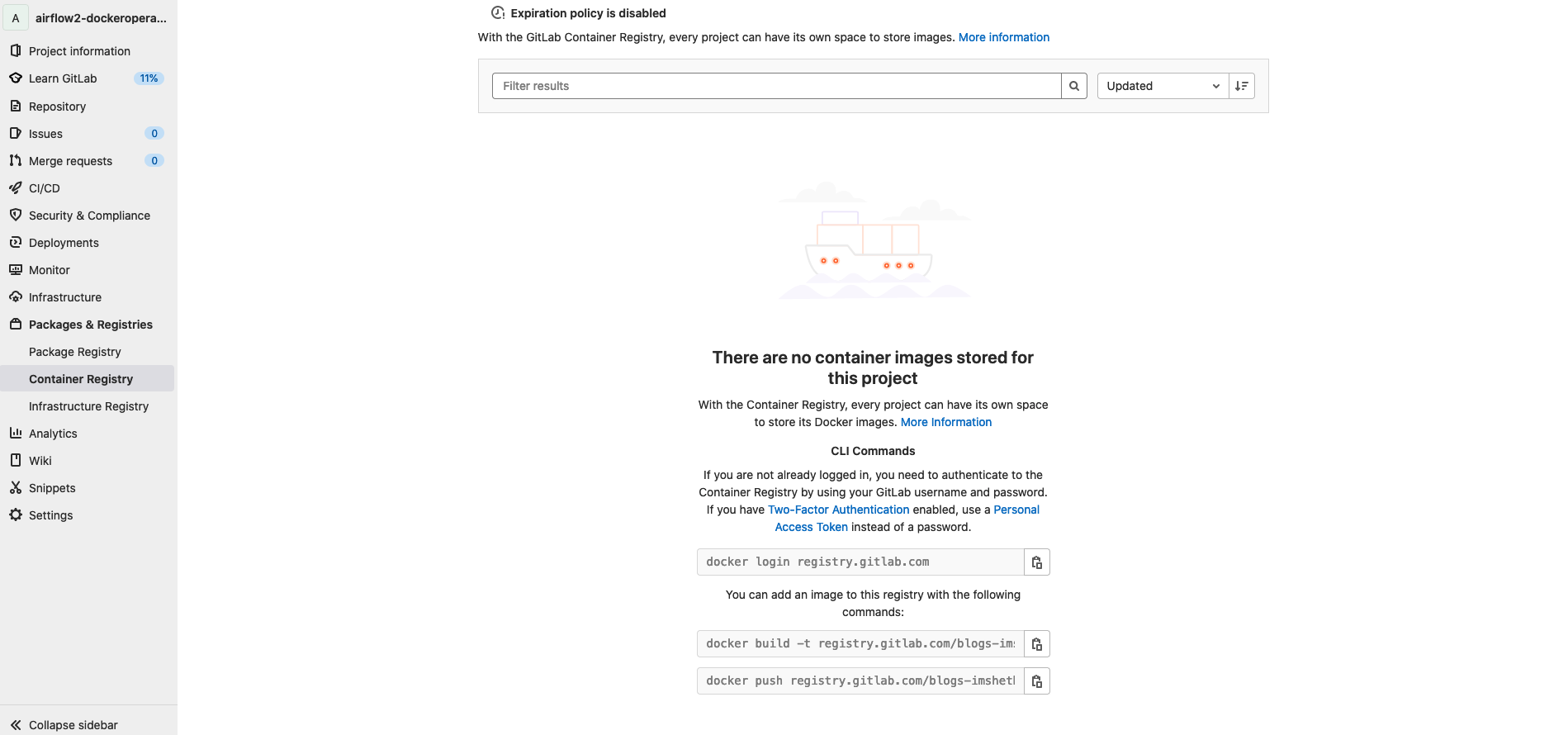

Gitlab container registry

- About Gitlab container registry

- This post connects to the Gitlab container registry via the docker remote API, without using an Airflow connection via the docker_conn_id parameter

Sample URLs, based on the repo used for this post, where you can find the container registry and repository settings for your repo

https://gitlab.com/blogs-imsheth-com/airflow2-dockeroperator-nodejs-gitlab/container_registry

https://gitlab.com/blogs-imsheth-com/airflow2-dockeroperator-nodejs-gitlab/-/settings/repository

- Create a sample project in Gitlab and set it up on your local machine

Create a repo like

https://gitlab.com/blogs-imsheth-com/airflow2-dockeroperator-nodejs-gitlab

Add your deploy key at

https://gitlab.com/blogs-imsheth-com/airflow2-dockeroperator-nodejs-gitlab/-/settings/repository#js-deploy-keys-settings

Clone the repo

https://gitlab.com/blogs-imsheth-com/airflow2-dockeroperator-nodejs-gitlab

Copy and paste the sample code from

https://github.com/imsheth/airflow2-dockeroperator-nodejs-gitlab

Push to your repo

git push origin master

- Log in to the Gitlab container registry

sudo docker login registry.gitlab.com

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.
Configure a credential helper to remove this warning. See
https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

- Copy the config JSON to your home directory and update its access on your local machine (this step also needs to be done on the server). The file holds your registry credentials, so make it readable only by your own user

mkdir -p ~/.docker && sudo cp /root/.docker/config.json ~/.docker/config.json && sudo chown "$(id -u)":"$(id -g)" ~/.docker/config.json && chmod 600 ~/.docker/config.json && cat ~/.docker/config.json

- Publish the container to Gitlab from your local machine, from the root where the Dockerfile is present (this needs the local docker daemon to be running; start it if it isn't already)

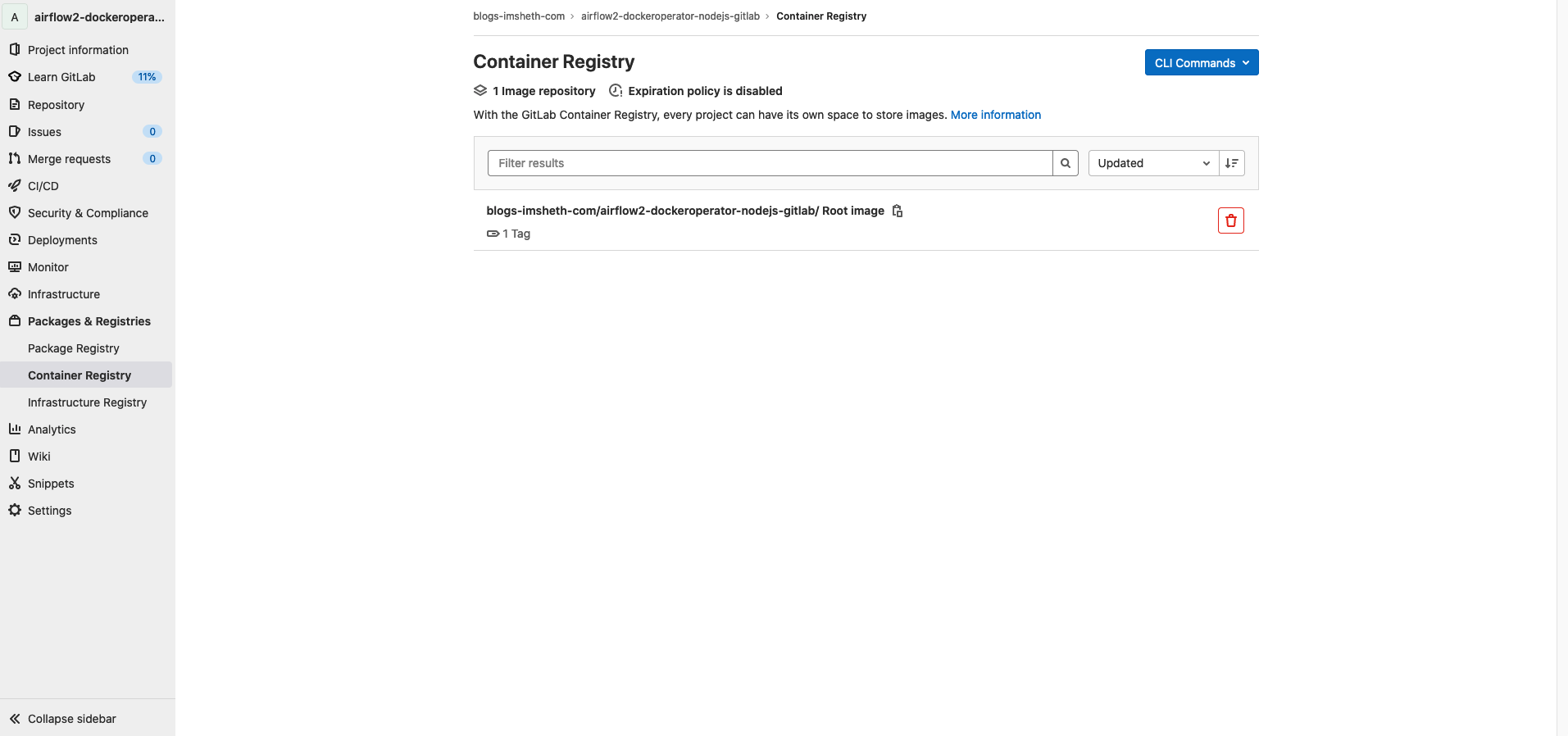

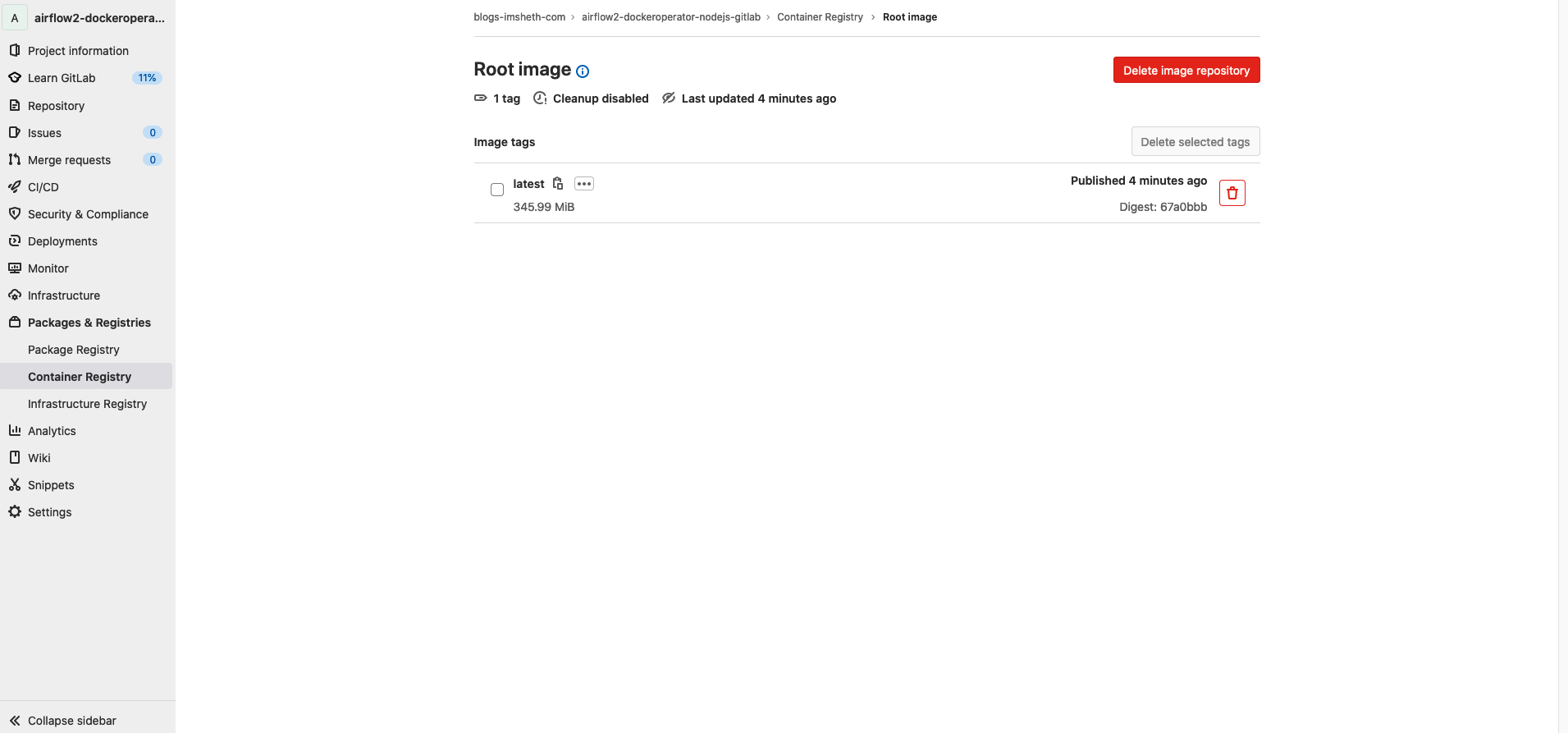

sudo docker build -t registry.gitlab.com/blogs-imsheth-com/airflow2-dockeroperator-nodejs-gitlab . && sudo docker push registry.gitlab.com/blogs-imsheth-com/airflow2-dockeroperator-nodejs-gitlab

- This completes Gitlab container registry installation and setup
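To double-check the copied ~/.docker/config.json (on your machine and on the server), you can confirm it holds credentials for registry.gitlab.com and that only its owner can access it. In this sketch the `auth` field is decoded as base64 of "username:password", which is the format `docker login` writes without a credential helper; with a helper configured the entry is empty and the function returns None. Helper names are hypothetical.

```python
import base64
import json
import os


def registry_username(config, registry="registry.gitlab.com"):
    """Username stored for `registry` in a docker config dict, or None."""
    entry = config.get("auths", {}).get(registry) or {}
    if "auth" not in entry:
        # Empty entries usually mean a credential helper (credsStore) holds the secret
        return None
    user_and_password = base64.b64decode(entry["auth"]).decode("utf-8")
    return user_and_password.split(":", 1)[0]


def load_docker_config(path=None):
    """Read ~/.docker/config.json (or `path`) into a dict."""
    path = path or os.path.join(os.path.expanduser("~"), ".docker", "config.json")
    with open(path) as fh:
        return json.load(fh)


def is_private(path):
    """True when group and others have no permissions on the file (e.g. mode 600)."""
    return (os.stat(path).st_mode & 0o077) == 0
```

`registry_username(load_docker_config())` should print your Gitlab username, never the password.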

Airflow DAGs

- DAGs will run the nodejs code

- Sample DAG for DockerOperator

- Clone your repo on the server; the source for this post can be found at https://github.com/imsheth/airflow2-dockeroperator-nodejs-gitlab

- Activate the virtual environment, clone at the proper path and install dependencies

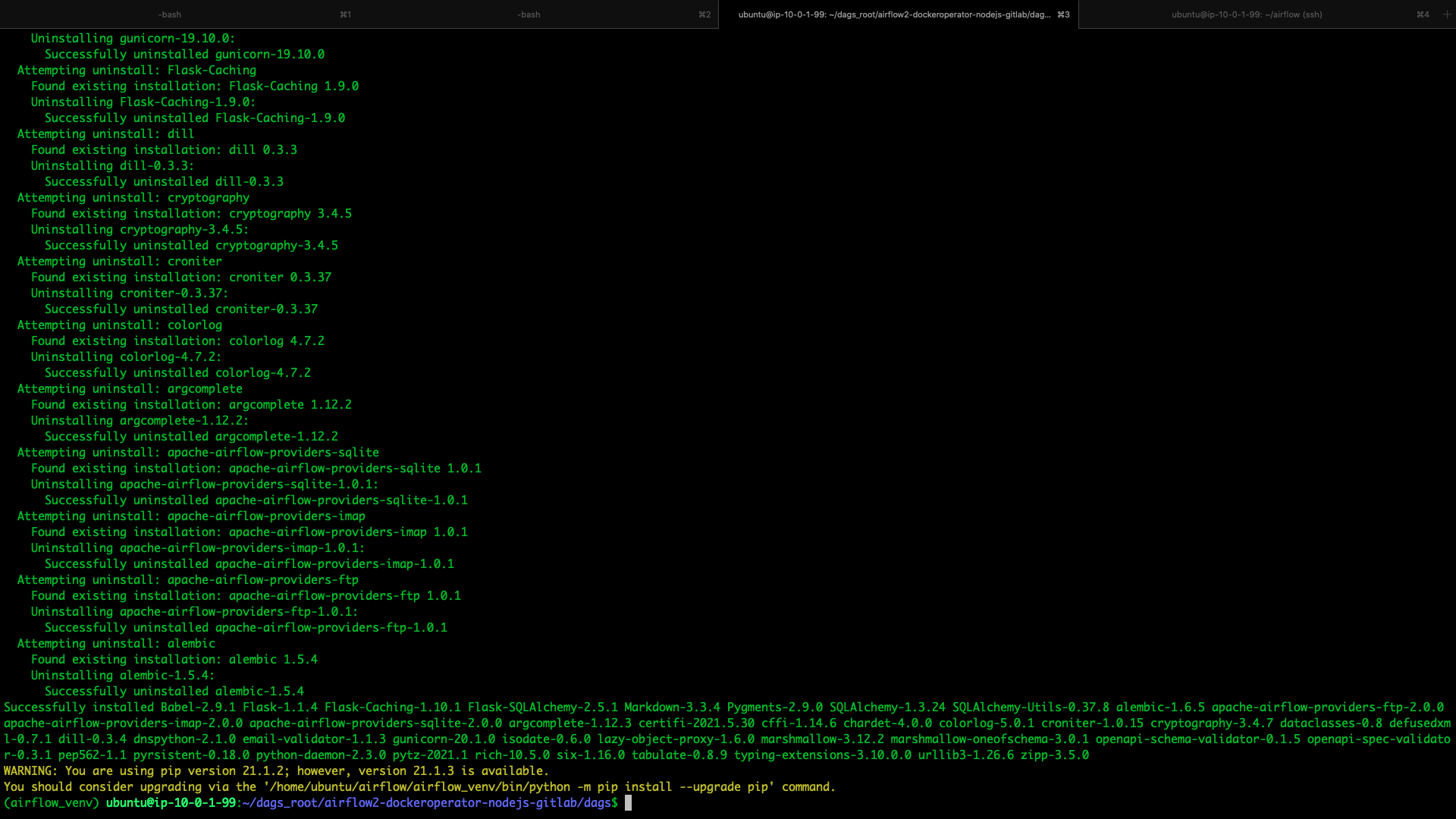

cd ~/airflow && source airflow_venv/bin/activate && cd ~/dags_root/ && git clone https://github.com/imsheth/airflow2-dockeroperator-nodejs-gitlab && cd airflow2-dockeroperator-nodejs-gitlab/dags && pip install -r requirements.txt

Your DAG is now available; enable (unpause) it first, then trigger it

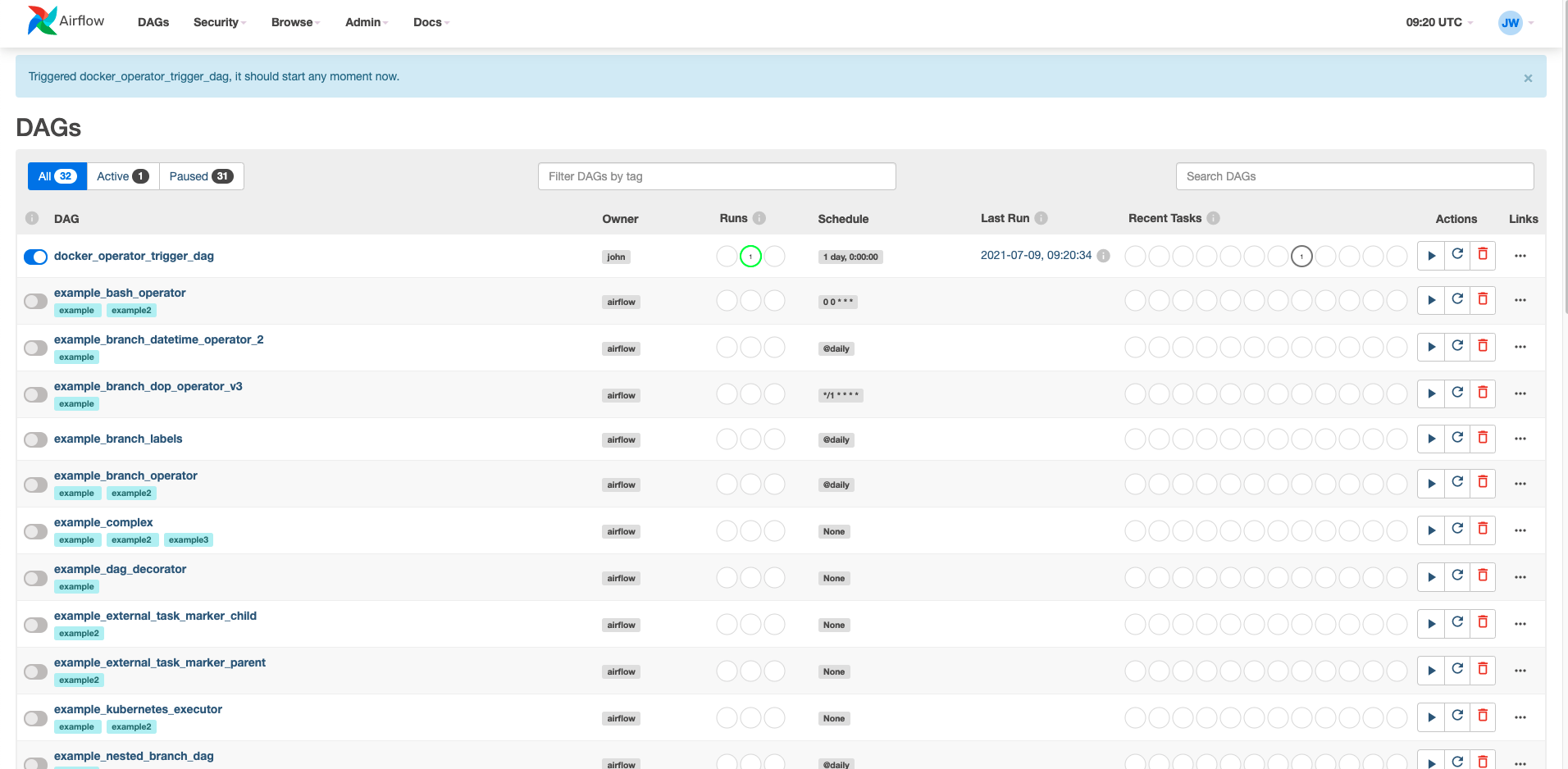

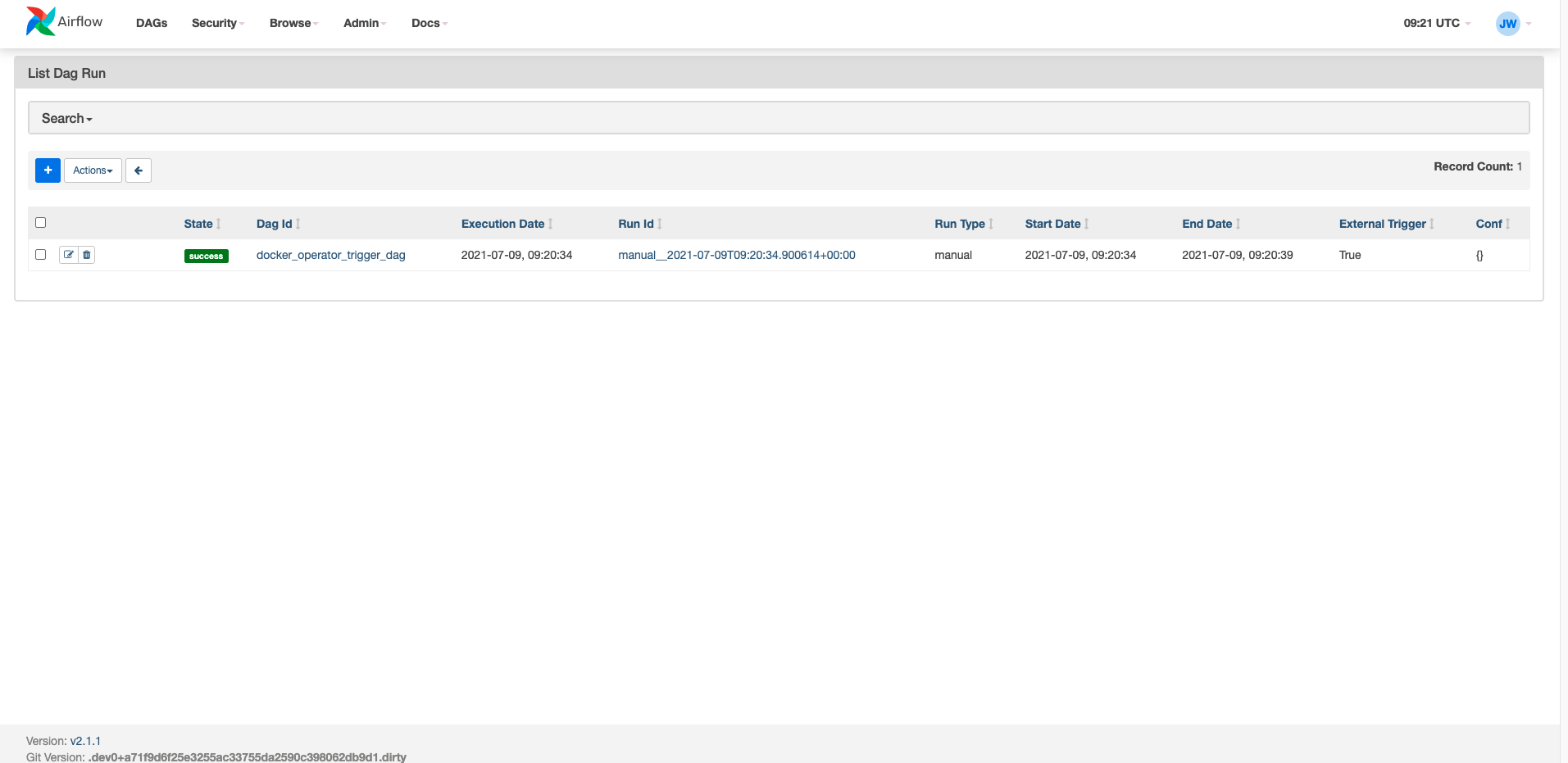

Trigger your DAG run

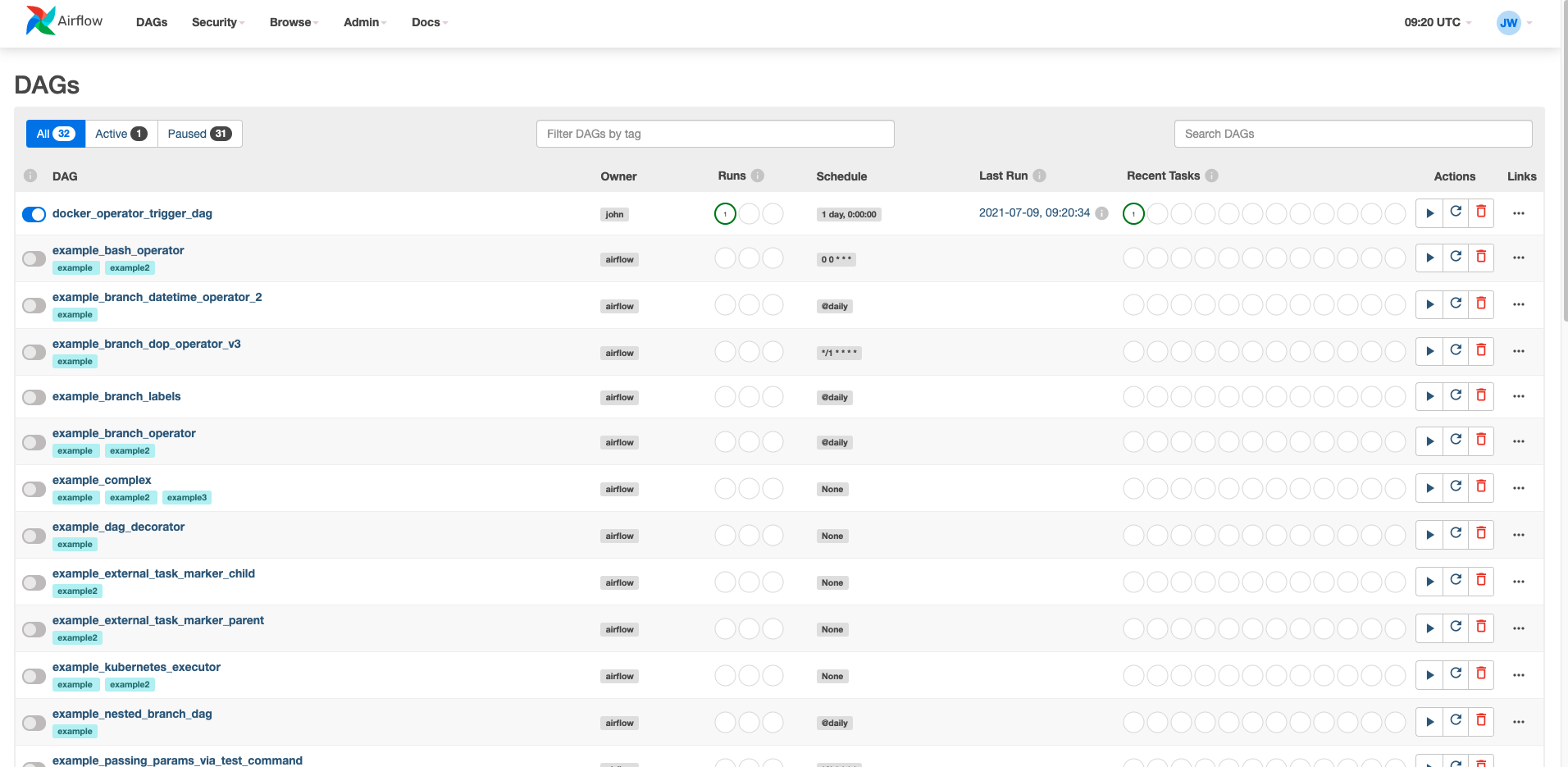

DAG run successful

DAG run details for the successful run

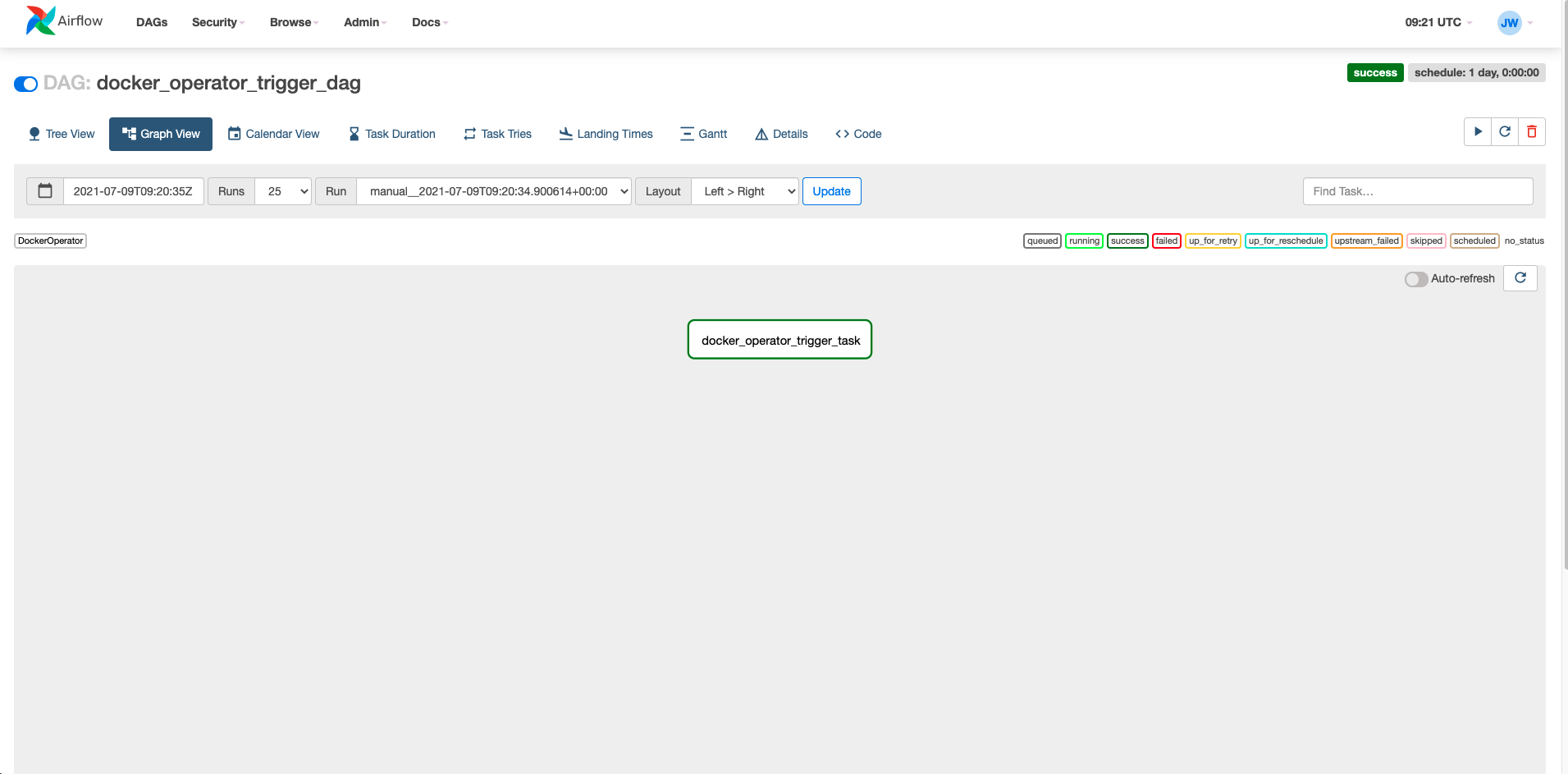

DAG run task details graph view for the successful run

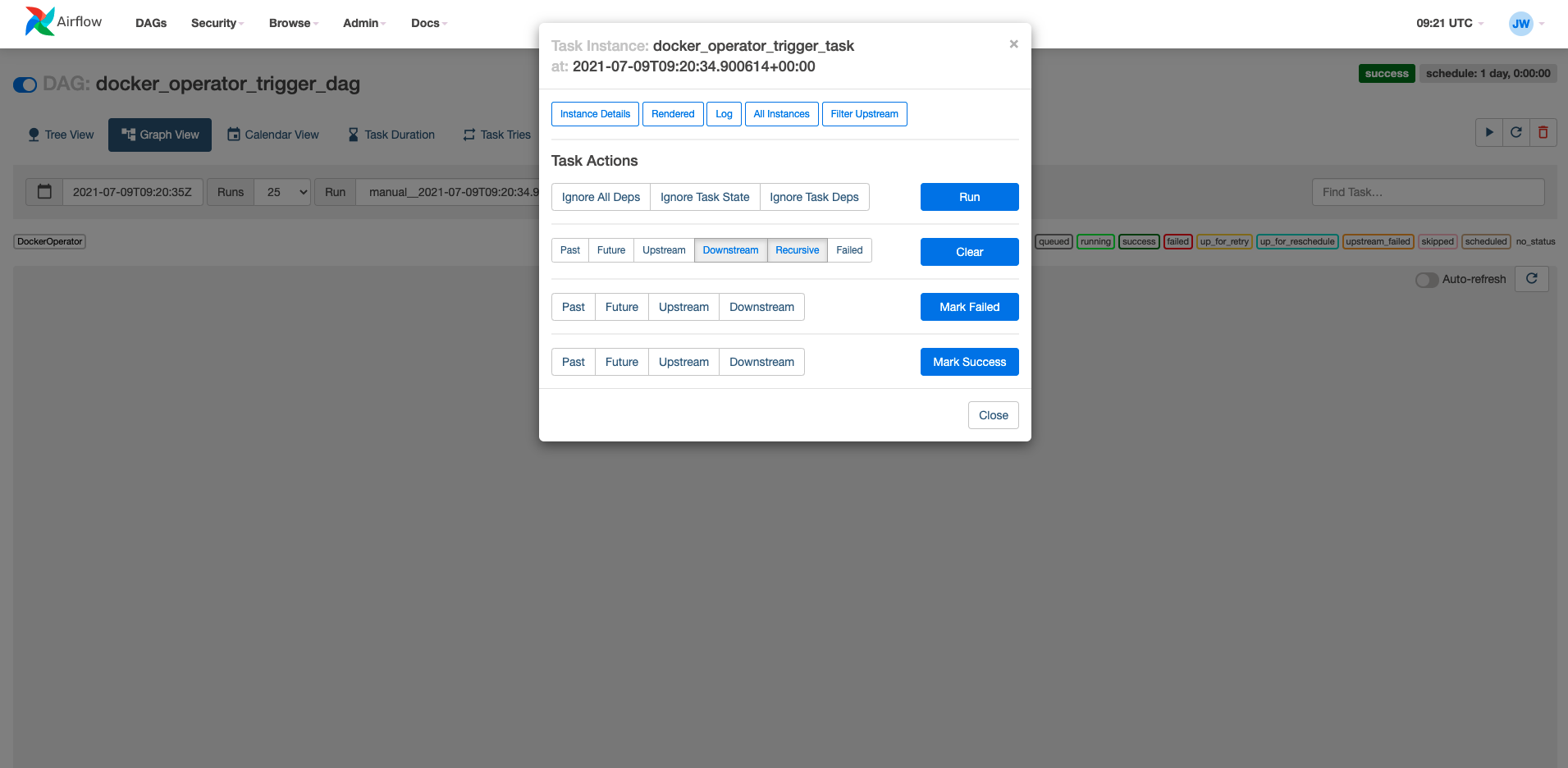

Task detail from DAG run task details graph view for the successful run

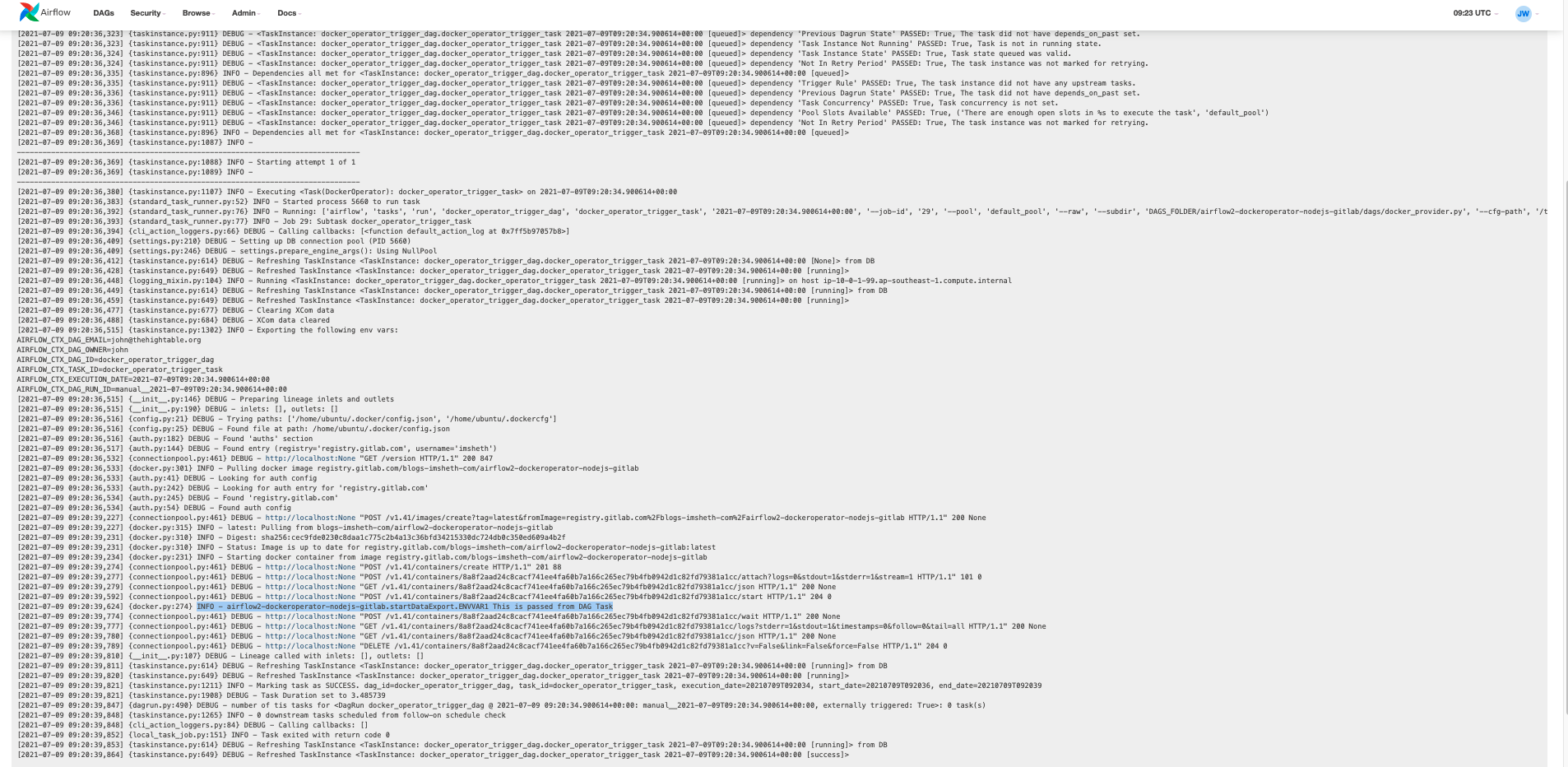

Task log from DAG run task details graph view for the successful run

This completes the DAG run with DockerOperator in Airflow. Keep in mind that the server needs at least an AWS EC2 t2.medium (or equivalent) instance just to run DAGs with DockerOperator in Airflow
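The unpause and trigger steps done in the UI above can also be scripted, for instance from a deployment pipeline. Airflow 2 has the CLI commands `airflow dags unpause <dag_id>` and `airflow dags trigger <dag_id>`, and the stable REST API exposes PATCH /api/v1/dags/{dag_id} and POST /api/v1/dags/{dag_id}/dagRuns. With `auth_backend = airflow.api.auth.backend.default` from the config section, my understanding is that the API accepts unauthenticated requests. A minimal sketch that only builds the requests; `my_docker_dag` is a hypothetical DAG id.

```python
import json
from urllib.request import Request, urlopen


def unpause_request(dag_id, base_url="http://localhost:8080"):
    """PATCH /api/v1/dags/{dag_id} with is_paused=false."""
    return Request(
        "{0}/api/v1/dags/{1}".format(base_url.rstrip("/"), dag_id),
        data=json.dumps({"is_paused": False}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PATCH",
    )


def trigger_request(dag_id, conf=None, base_url="http://localhost:8080"):
    """POST /api/v1/dags/{dag_id}/dagRuns, optionally passing a conf dict."""
    return Request(
        "{0}/api/v1/dags/{1}/dagRuns".format(base_url.rstrip("/"), dag_id),
        data=json.dumps({"conf": conf or {}}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=30):
    """Send a request and decode the JSON response."""
    with urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Calling `send(unpause_request("my_docker_dag"))` followed by `send(trigger_request("my_docker_dag"))` mirrors the two clicks in the UI.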

systemd service

- Sample scripts

- Running Apache-Airflow as a service

- Running Apache-Airflow as a service in virtual environment

- systemd manual

- Running Apache-Airflow as a service in virtual environment

- systemd units don't interpolate variables and ignore lines starting with "export" in .bashrc, so the variables are not exported after a server restart. To work around this, add the following to a startup script

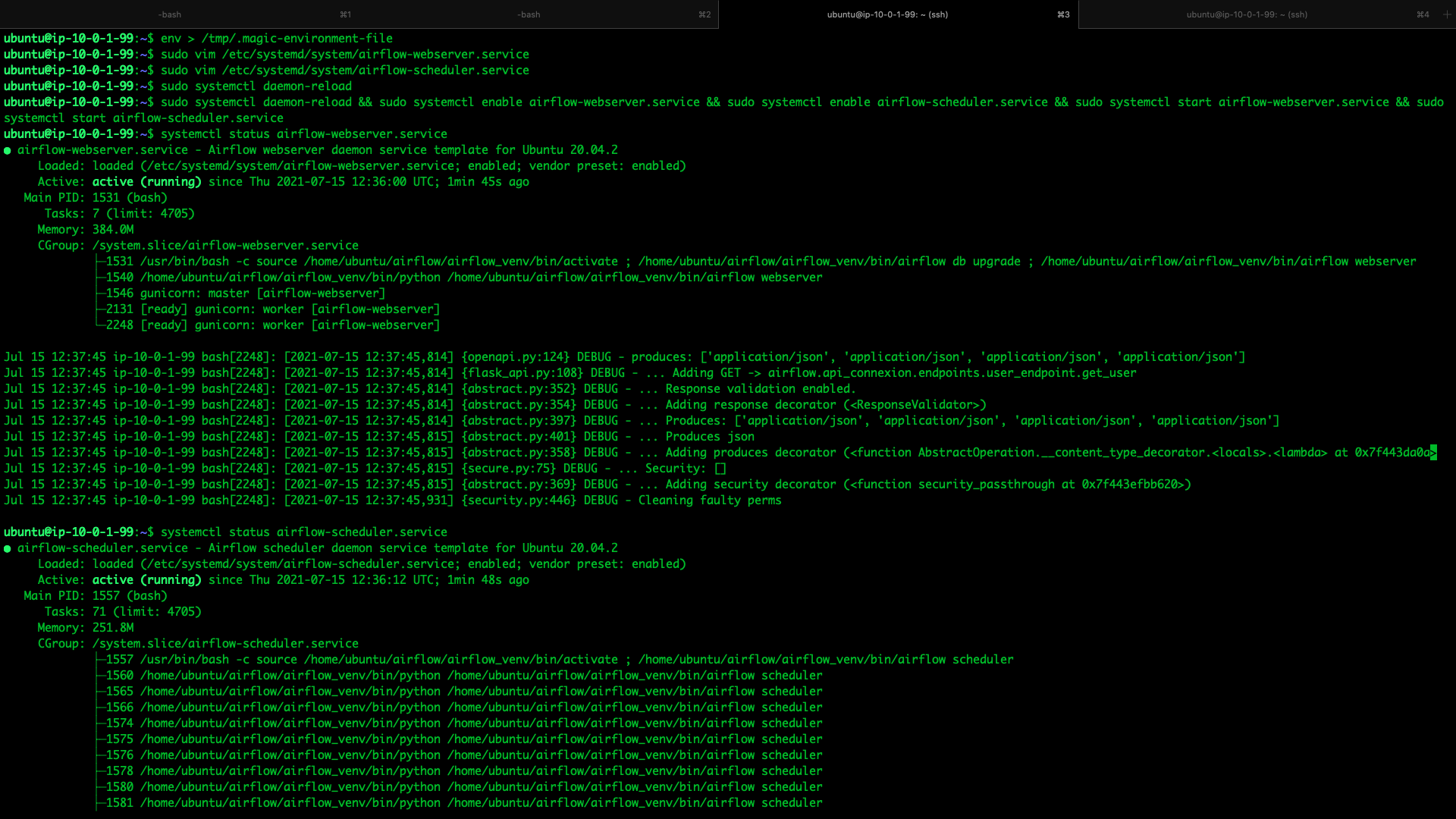

env > /tmp/.magic-environment-file

- Create the webserver service

sudo vim /etc/systemd/system/airflow-webserver.service

[Unit]

Description=Airflow webserver daemon service template for Ubuntu 20.04.2

After=network.target postgresql.service

Wants=postgresql.service

[Service]

#PIDFile=/run/airflow/webserver.pid

User=ubuntu

Group=ubuntu

Type=simple

EnvironmentFile=-/tmp/.magic-environment-file

ExecStart=/usr/bin/bash -c 'source /home/ubuntu/airflow/airflow_venv/bin/activate ; /home/ubuntu/airflow/airflow_venv/bin/airflow db upgrade ; /home/ubuntu/airflow/airflow_venv/bin/airflow webserver'

Restart=on-failure

RestartSec=60s

PrivateTmp=true

[Install]

WantedBy=multi-user.target

- Create the scheduler service

sudo vim /etc/systemd/system/airflow-scheduler.service

[Unit]

Description=Airflow scheduler daemon service template for Ubuntu 20.04.2

After=network.target postgresql.service

Wants=postgresql.service

[Service]

#PIDFile=/run/airflow/scheduler.pid

User=ubuntu

Group=ubuntu

Type=simple

EnvironmentFile=-/tmp/.magic-environment-file

ExecStart=/usr/bin/bash -c 'source /home/ubuntu/airflow/airflow_venv/bin/activate ; /home/ubuntu/airflow/airflow_venv/bin/airflow scheduler'

Restart=on-failure

RestartSec=60s

PrivateTmp=true

[Install]

WantedBy=multi-user.target
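Both unit files read /tmp/.magic-environment-file through EnvironmentFile=. Instead of dumping the whole `env` (which also captures session-specific variables), you could write only the variables exported earlier in ~/.bashrc. A minimal sketch, assuming the key list below is what your services need (that selection is my choice, not part of the original setup); values are written unquoted, so the sketch refuses values containing quotes, backslashes or newlines rather than guessing systemd's quoting rules.

```python
import os

# Hypothetical selection: the variables exported in ~/.bashrc earlier in this post, plus PATH
DEFAULT_KEYS = ("AIRFLOW_HOME", "AIRFLOW_VERSION", "PYTHON_VERSION", "CONSTRAINT_URL", "PATH")
UNSAFE_CHARS = ('"', "'", "\\", "\n")


def environment_file_lines(environ, keys=DEFAULT_KEYS):
    """Render unquoted KEY=value lines for the selected keys present in `environ`."""
    lines = []
    for key in keys:
        if key not in environ:
            continue
        value = environ[key]
        # systemd treats quotes and backslashes specially; this sketch refuses them
        if any(ch in value for ch in UNSAFE_CHARS):
            raise ValueError("value of {0} needs quoting; not handled here".format(key))
        lines.append("{0}={1}".format(key, value))
    return lines


def write_environment_file(path="/tmp/.magic-environment-file", environ=None):
    """Write the selected variables to the file referenced by EnvironmentFile=."""
    lines = environment_file_lines(os.environ if environ is None else environ)
    with open(path, "w") as fh:
        fh.write("\n".join(lines) + "\n")
    return lines
```

Run it from the startup script after ~/.bashrc has been sourced, so the exported values are present in `os.environ`.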

- Reload daemon, enable and start services

sudo systemctl daemon-reload && sudo systemctl enable airflow-webserver.service && sudo systemctl enable airflow-scheduler.service && sudo systemctl start airflow-webserver.service && sudo systemctl start airflow-scheduler.service

- Check service status

systemctl status airflow-webserver.service

- Check service status

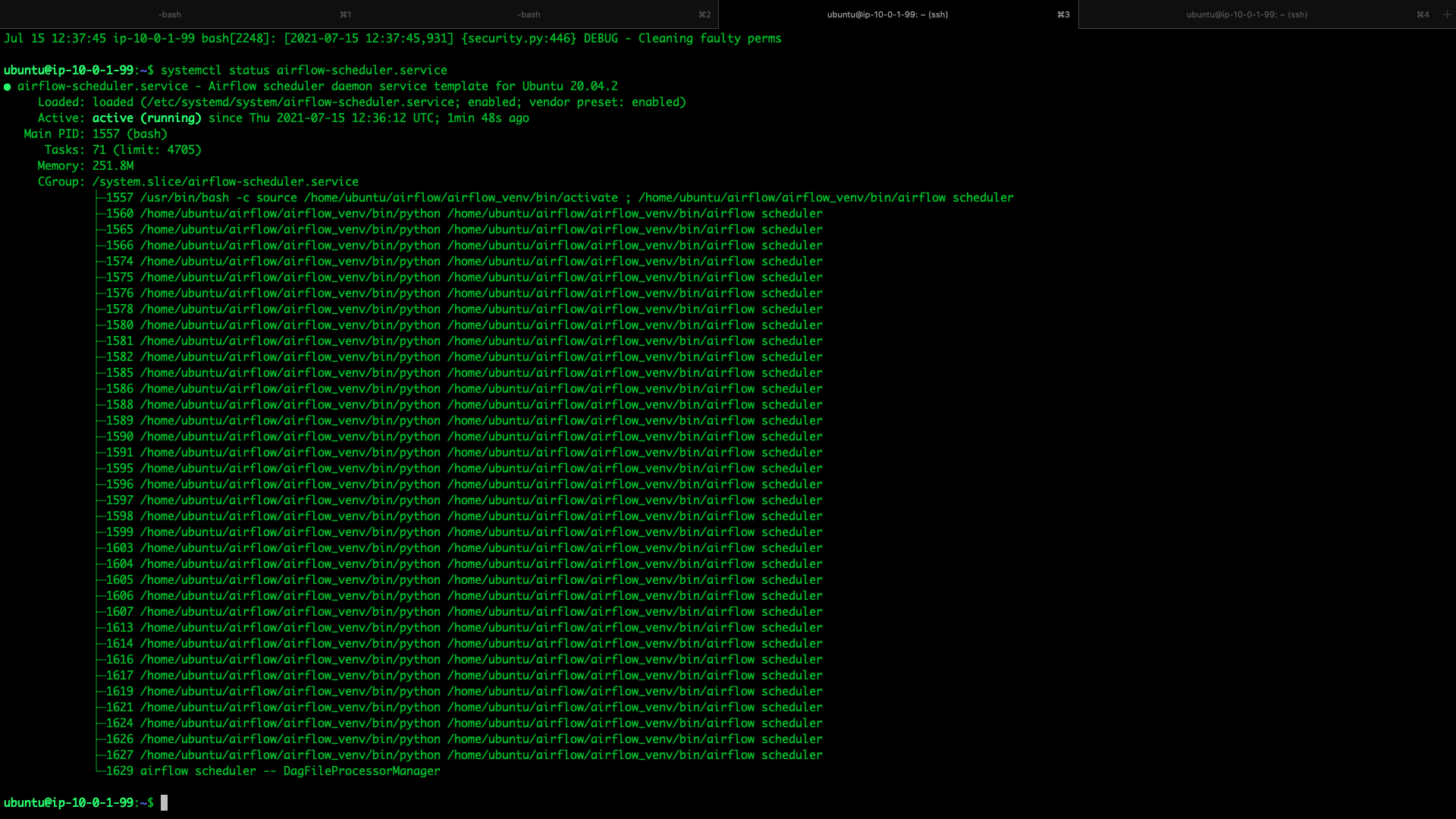

systemctl status airflow-scheduler.service

- Check systemd logs

journalctl -xe
journalctl -u airflow-webserver.service -e

- Check live logs

journalctl -u airflow-webserver.service -e -f

Deployment

- This command can be added to your deployment pipeline

cd /home/ubuntu/dags_root/airflow2-dockeroperator-nodejs-gitlab/dags && source /home/ubuntu/airflow/airflow_venv/bin/activate && git pull && pip install -r /home/ubuntu/dags_root/airflow2-dockeroperator-nodejs-gitlab/dags/requirements.txt && sudo systemctl stop airflow-webserver.service && sudo systemctl start airflow-webserver.service && sudo systemctl stop airflow-scheduler.service && sudo systemctl start airflow-scheduler.service

Unsuccessful trials

- Trials with airflow2 inside docker

- https://airflow.apache.org/docs/apache-airflow/stable/docker-compose.yaml with edits to the permissions for the docker installation, which failed miserably

- https://stackoverflow.com/questions/41381350/docker-compose-installing-requirements-txt

- https://stackoverflow.com/questions/45211594/running-a-custom-script-using-entrypoint-in-docker-compose

- Trials with airflow2 inside docker with DockerOperator (docker inside docker)

- Referred to initially but not fully used

- https://vujade.co/install-apache-airflow-ubuntu-18-04/

- https://towardsdatascience.com/setting-up-apache-airflow-2-with-docker-e5b974b37728

Miscellaneous

- Purge all unused or dangling images, containers and networks (unused volumes are only removed if you also pass --volumes). This was used during the research and development phase

docker system prune -af

- List images and containers

docker images -a
docker container ls
docker ps -a

- DockerOperator parameters from the sample at https://github.com/imsheth/airflow2-dockeroperator-nodejs-gitlab/blob/master/dags/docker_provider.py

force_pull = True

xcom_all = True

auto_remove = True

tty = True
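For reference, here is how those flags fit together as DockerOperator keyword arguments. This is a sketch, not the author's docker_provider.py: the `docker_url` pointing at the remote API on port 2375 and `api_version="auto"` are my assumptions, and the helper name is hypothetical. It needs no Airflow import, so you can inspect it anywhere. Note that newer apache-airflow-providers-docker releases changed `auto_remove` from a boolean to a string option, so check the provider version you install.

```python
# Image path from this post's Gitlab project
IMAGE = "registry.gitlab.com/blogs-imsheth-com/airflow2-dockeroperator-nodejs-gitlab"


def docker_operator_kwargs(image=IMAGE, command=None, docker_url="tcp://localhost:2375"):
    """Keyword arguments for DockerOperator mirroring the flags listed above."""
    return {
        "image": image,
        "command": command,          # None: run the image's own CMD
        "docker_url": docker_url,    # assumption: the remote API enabled on port 2375 earlier
        "api_version": "auto",       # let docker-py negotiate the API version
        "force_pull": True,          # pull the image on every run, picking up new pushes
        "xcom_all": True,            # push all stdout lines to XCom, not only the last one
        "auto_remove": True,         # remove the container once it exits
        "tty": True,                 # allocate a pseudo-TTY; the provider docs tie this to seeing logs
    }
```

In a DAG file you would then write `DockerOperator(task_id="run_nodejs", **docker_operator_kwargs())` inside a `with DAG(...)` block, after `from airflow.providers.docker.operators.docker import DockerOperator`; the task id is hypothetical.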

- SSH for deployment

ssh-keygen -t rsa -b 2048 -C "john@thehightable.org"

- Add the public key to the repo as a deploy key

cat ~/.ssh/id_rsa.pub

- For running DAGs with DockerOperator

- Docker for Mac doesn't listen on 2375

- docker.from_env() causes TypeError: load_config() got an unexpected keyword argument 'config_dict'

- Gitlab registry

- docker remote API

ExecStart=/usr/bin/dockerd -H fd:// -H 0.0.0.0:2375 --containerd=/run/containerd/containerd.sock
sudo DOCKER_GROUP_ID=`getent group docker | cut -d: -f3` docker-compose up -d
docker run -d -v /var/run/docker.sock:/var/run/docker.sock -p 127.0.0.1:2375:2375 bobrik/socat TCP-LISTEN:2375,fork UNIX-CONNECT:/var/run/docker.sock

- macOS issues

- Negsignal.SIGKILL error on macOS

- Airflow task running tweepy exits with return code -6

- Multiprocessing causes Python to crash and gives an error may have been in progress in another thread when fork() was called

- Check docker container logs

sudo docker ps
sudo docker exec -it 82f4b968cb6d sh
sudo docker-compose logs --tail="all" -f

#airflow #airflow2 #apacheairflow #apacheairflow2.0.1 #dockeroperator #docker #python #python3.6 #pip #pip20.2.4 #ubuntu #ubuntu20.04.2lts #tech
